The Beautiful Mess 2020

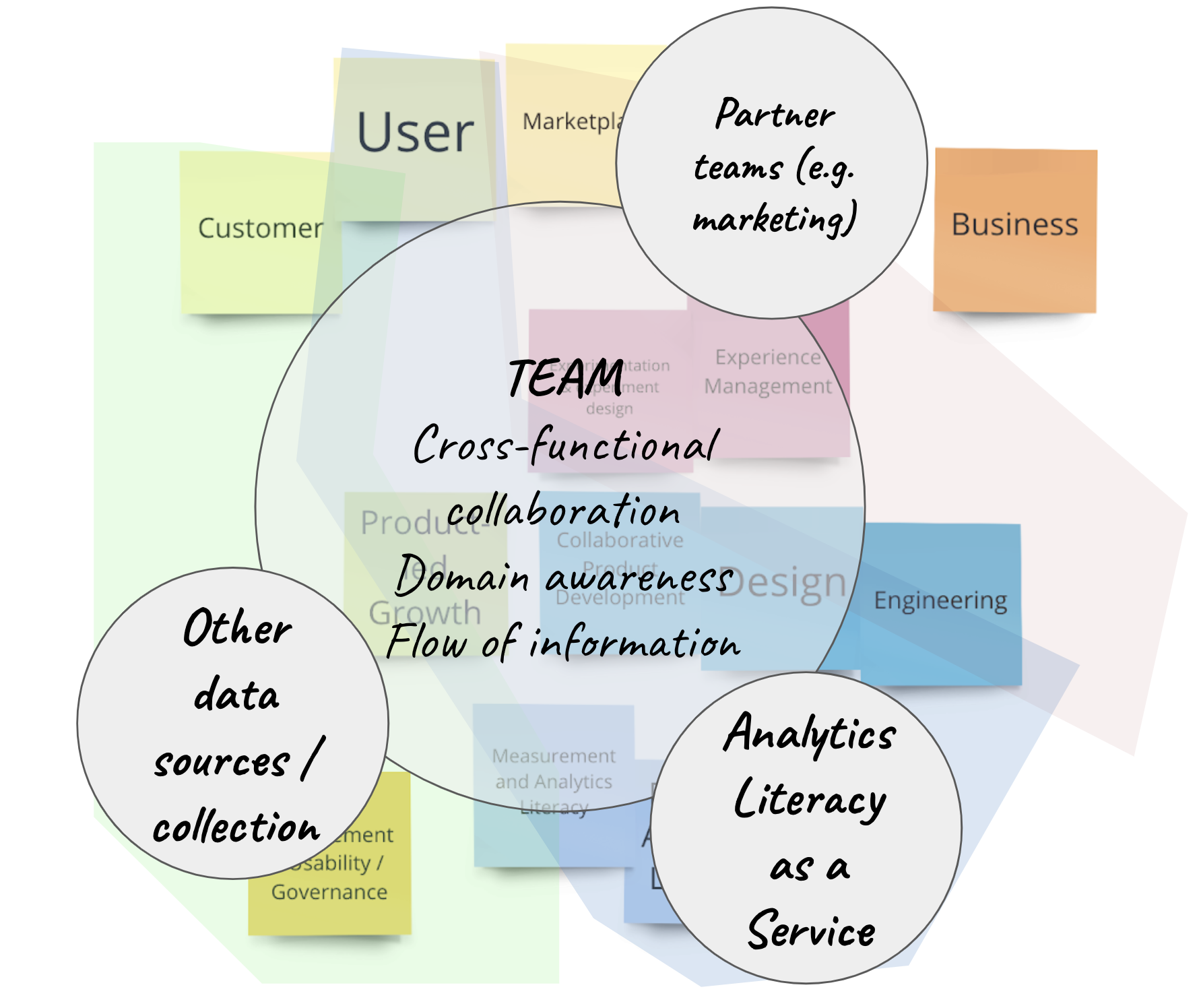

In 2020, I wrote a weekly post about the beautiful mess of product development. Topics covered included product management, design, cross-functional collaboration, change agency, general decision making, continuous improvement, and sustainable approaches to work. I had intended to write a book in 2020, but had to stop. It was too difficult with the pandemic, a toddler, and a full-time job. Meanwhile, I kept plodding away at these posts. A friend suggested I release this as "a book", and while I don't consider this to be a truly cohesive body of work, I do believe there are some good, actionable ideas here.

Found this helpful?

Leave a tip... or Purchase EPUB/MOBI Versions of Book

Table of Contents

- TBM 1/53: The Empty Roadmap

- TBM 2/53: Three Options

- TBM 3/53: Experiment With Not On

- TBM 4/53: Teach by Starting Together

- TBM 5/53: It's Hard to Learn If You Already Know

- TBM 6/53: Way and Why

- TBM 7/53: Learning Backlogs

- TBM 8/53: Product Initiative Reviews

- TBM 9/53: Some MVP and Experiment Tips

- TBM 10/53: Hammers & Nails

- TBM 11/53: The Know-It-All CEO

- TBM 12/53: Not BAU

- TBM 13/53: Good Idea?

- TBM 14/53: 1s and 3s

- TBM 15/53: Why Can't This Be Like...

- TBM 16/53: The Boring Bits

- TBM 17/53: Measuring to Learn vs. Measuring to Conform

- TBM 18/53: Blank Slates, Seedlings, Freight Trains, and Gardening

- TBM 19/53: Drivers, Constraints, and Floats

- TBM 20/53: Questions and Context

- TBM 21/53: "Vision" and Prescriptive Roadmaps

- TBM 22/53: Attacking X vs. Starting Y

- TBM 23/53: Privilege & Credibility

- TBM 24/53: Mapping Beliefs (and Agreeing to Disagree)

- TBM 25/53: Persistent Models vs. Point-In-Time Goals

- TBM 26/53: Kryptonite and Curiosity

- TBM 27/53: The Lure of New Features and Products

- TBM 28/53: Developer & Designer Collaboration (in the Real World)

- TBM 29/53: Shipping Faster Than You Learn (or…)

- TBM 30/53: Healthy Forcing Functions (and Paying Attention)

- TBM 31/53: Scaling Change Experiments

- TBM 32/53: Beyond Generic KPIs

- TBM 33/53: But Why Is It Working?

- TBM 34/53: Better Experiments

- TBM 35/53: Basic Prioritization Questions (and When to Converge on a Solution)

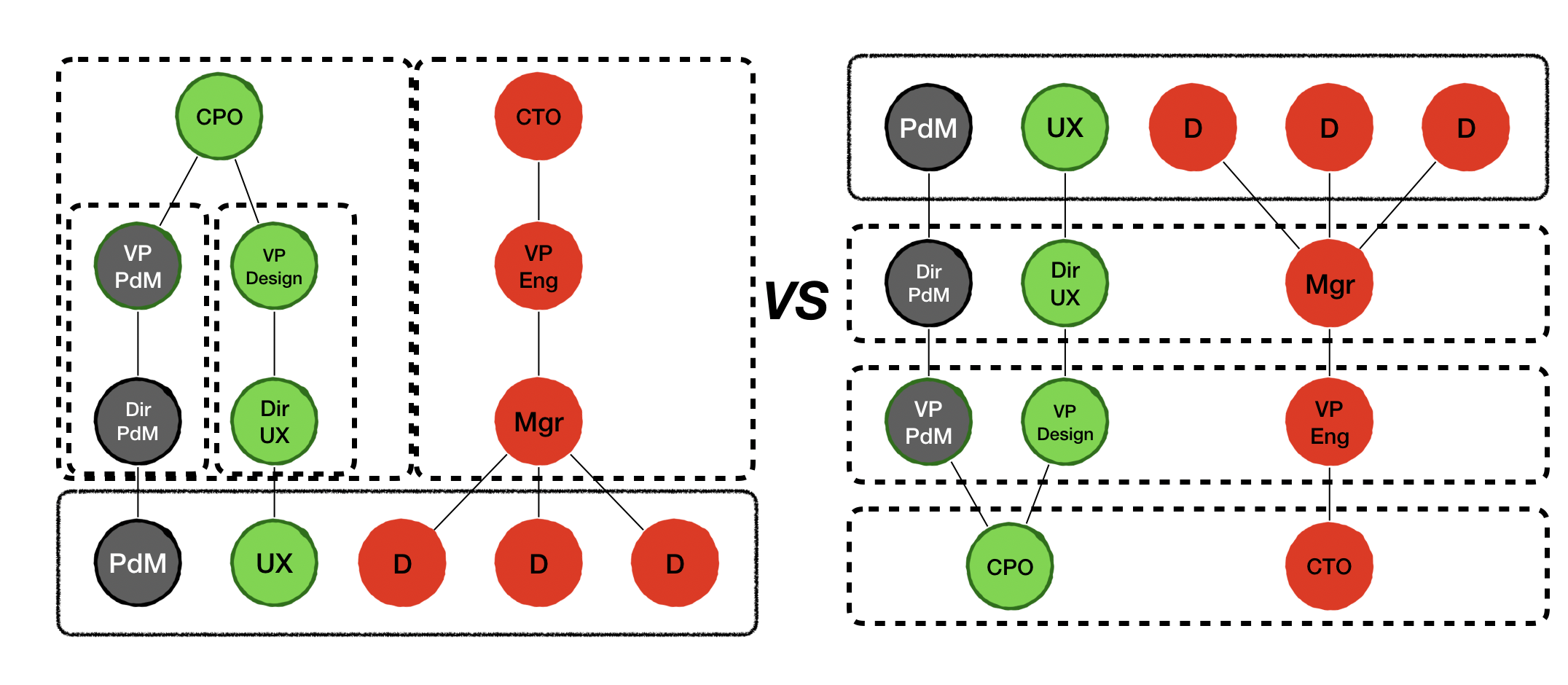

- TBM 36/53: Self-similarity and Manager/Leader Accountability (for Cross-functional Teams)

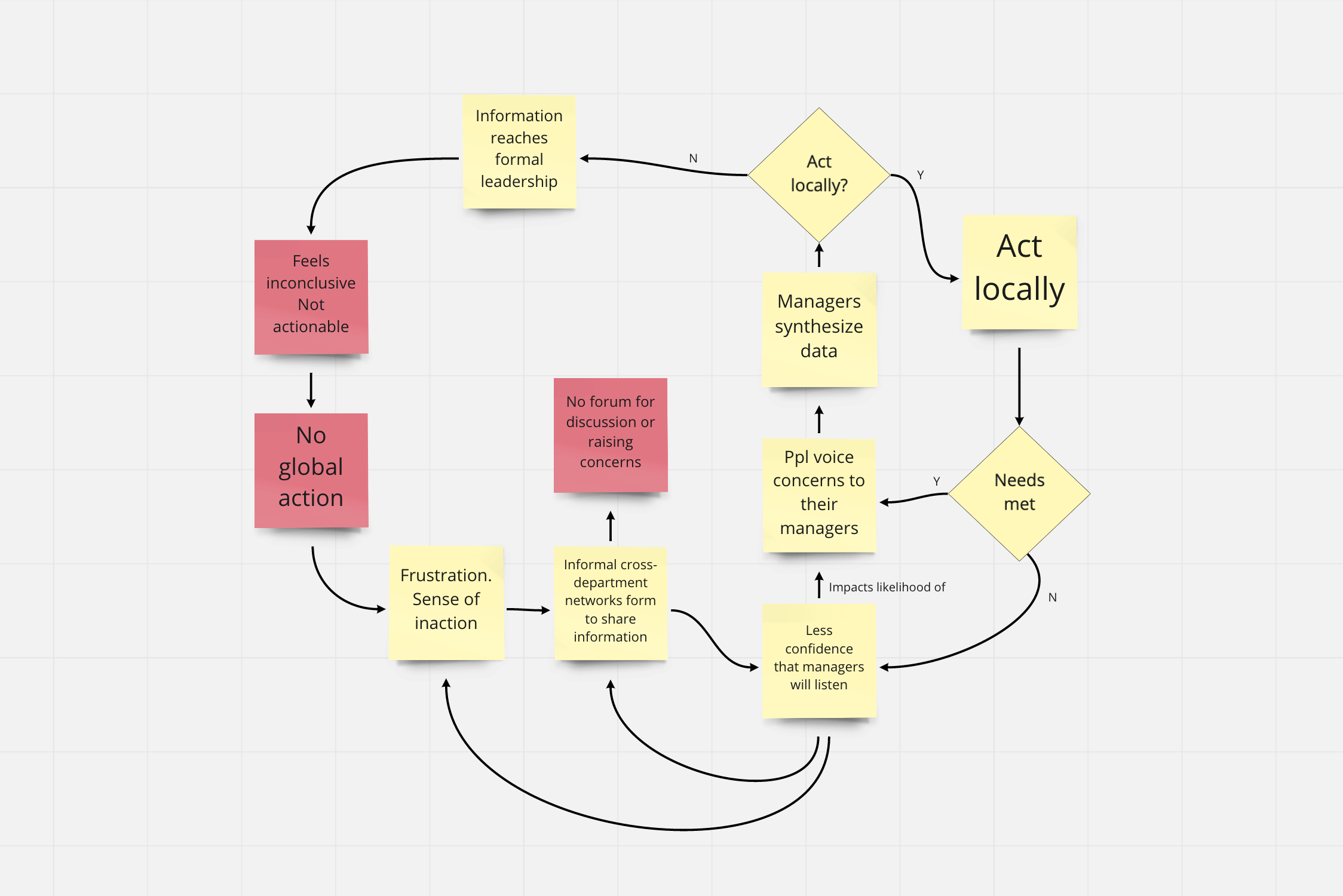

- TBM 37/53: Talk to Your Manager, Take the Survey

- TBM 38/53: Assumptions: Value & Certainty

- TBM 39/53: Neither, Either, While, Without

- TBM 40/53: 10 Tips for Sustainable Change Agency

- TBM 41/53: Strategy and Structure

- TBM 42/53: The Annual Planning Dance

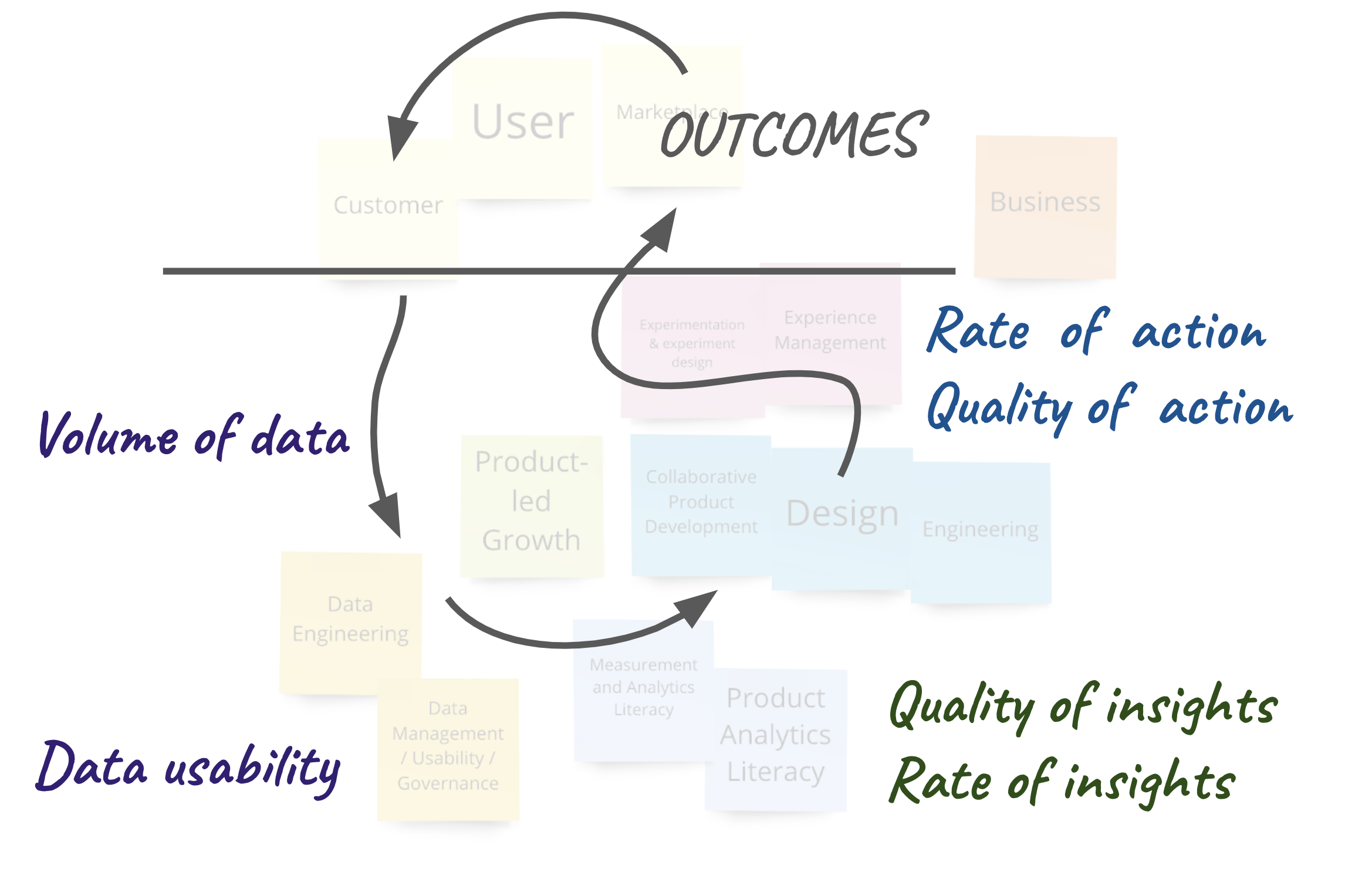

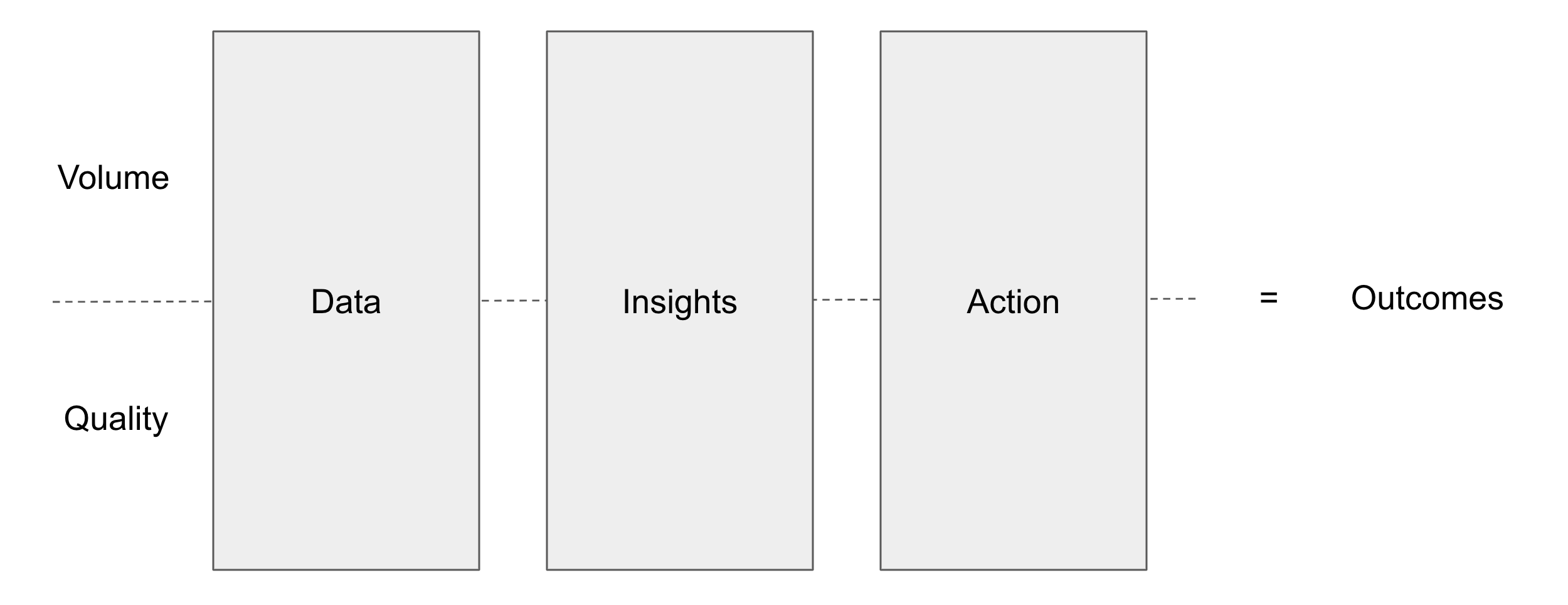

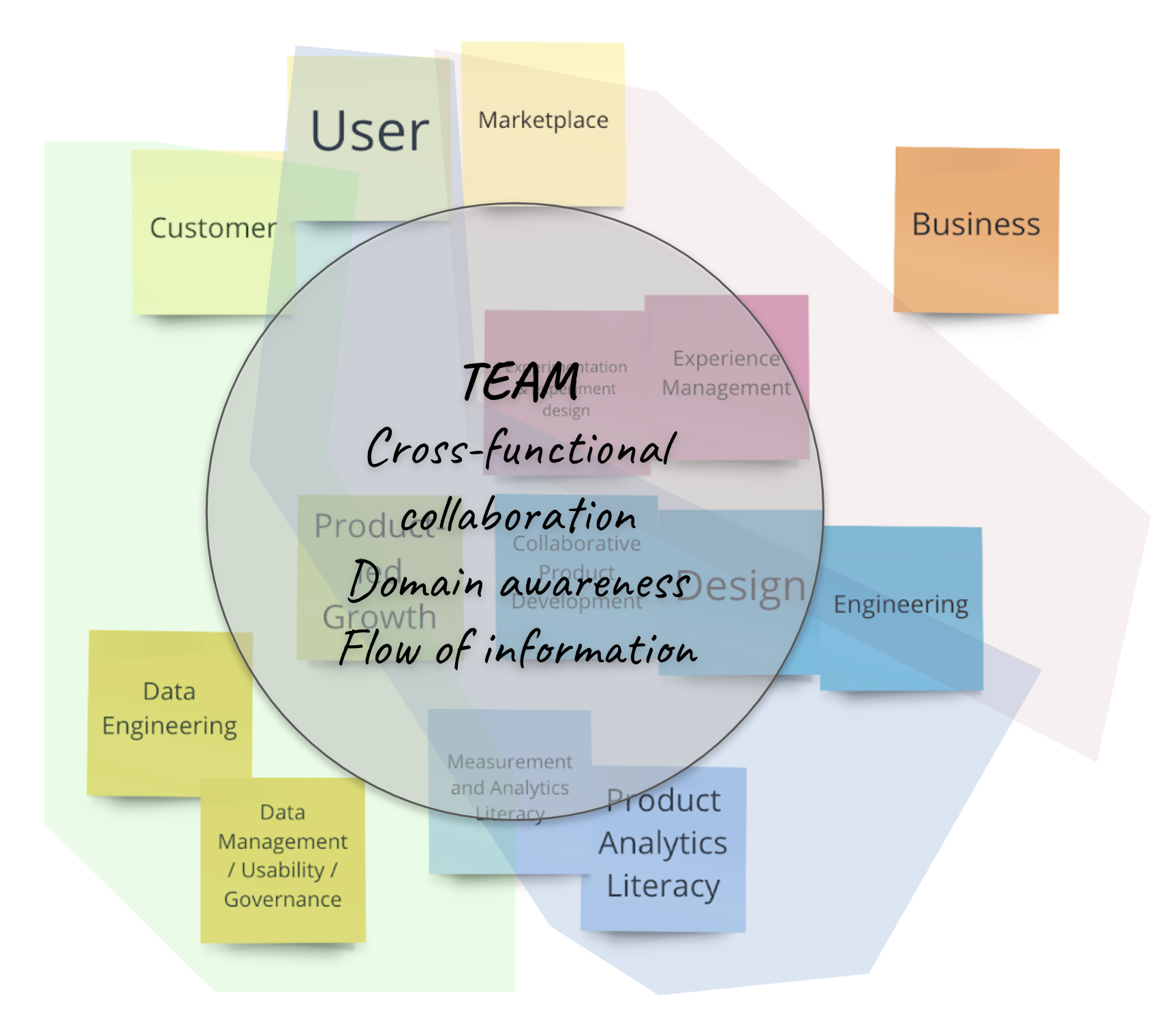

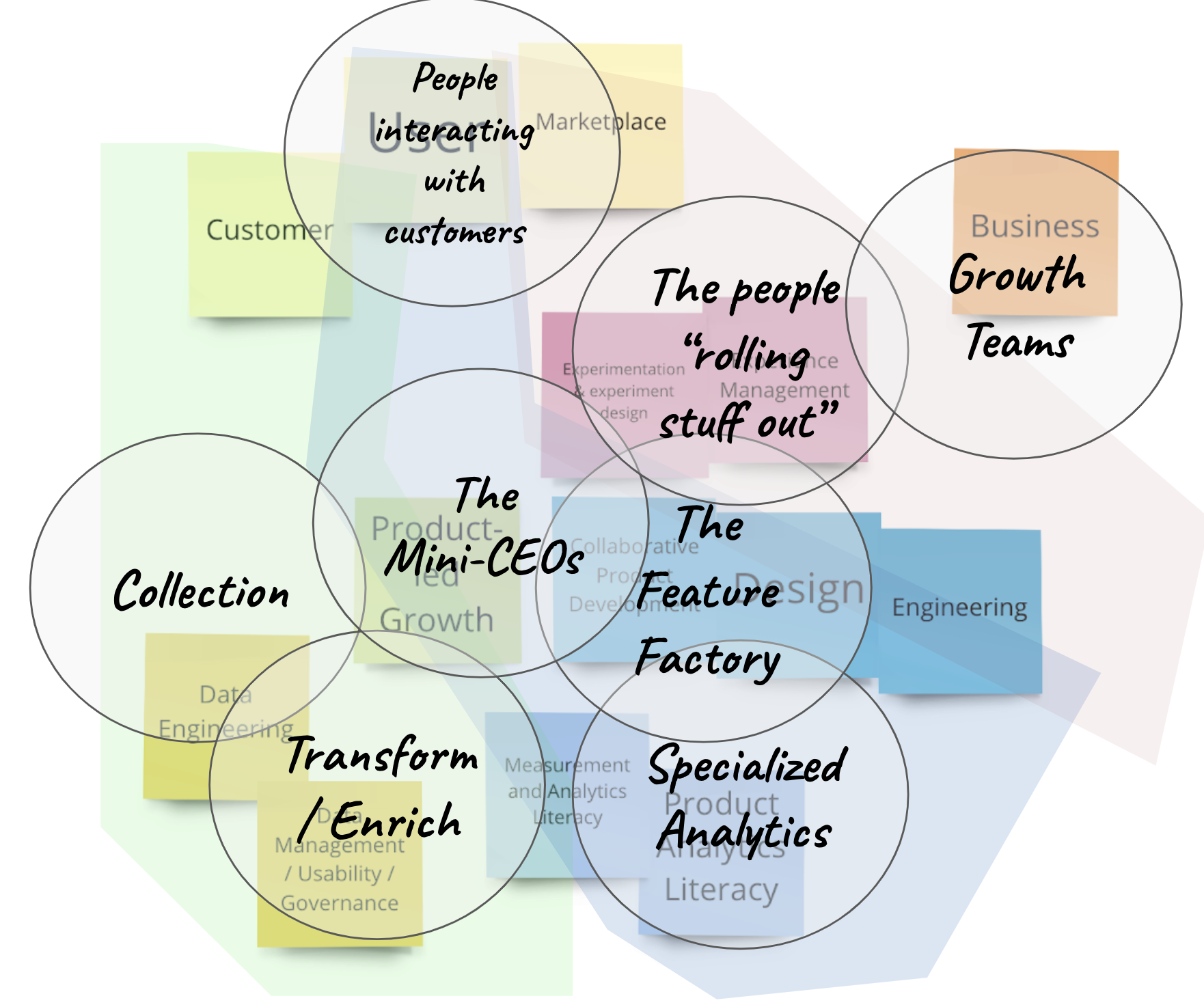

- TBM 43/53: The Product Outcomes Formula®™©

- TBM 44/53: What Is Your Team Stubborn About?

- TBM 45/53: Rank Your 2020 Initiatives (by Impact)

- TBM 46/53: Think Big, Work Small

- TBM 47/53: Thinking Like a Designer/Product Manager

- TBM 48/53: One Roadmap

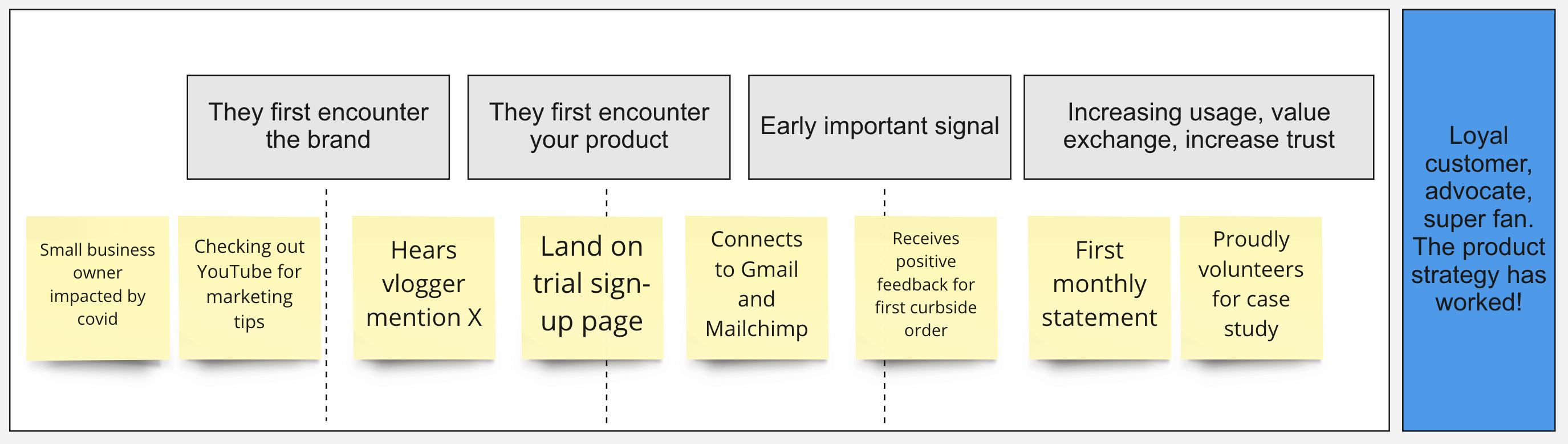

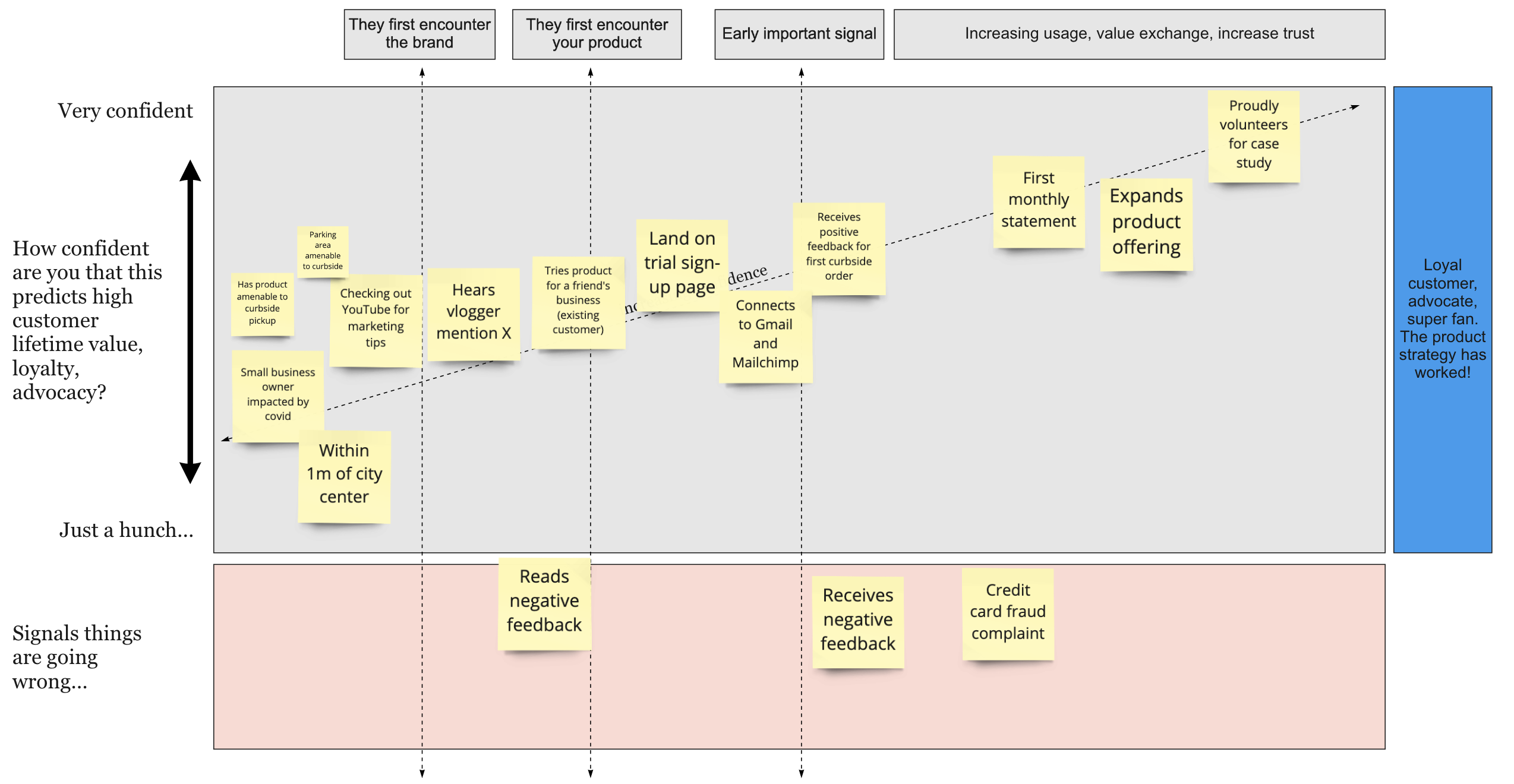

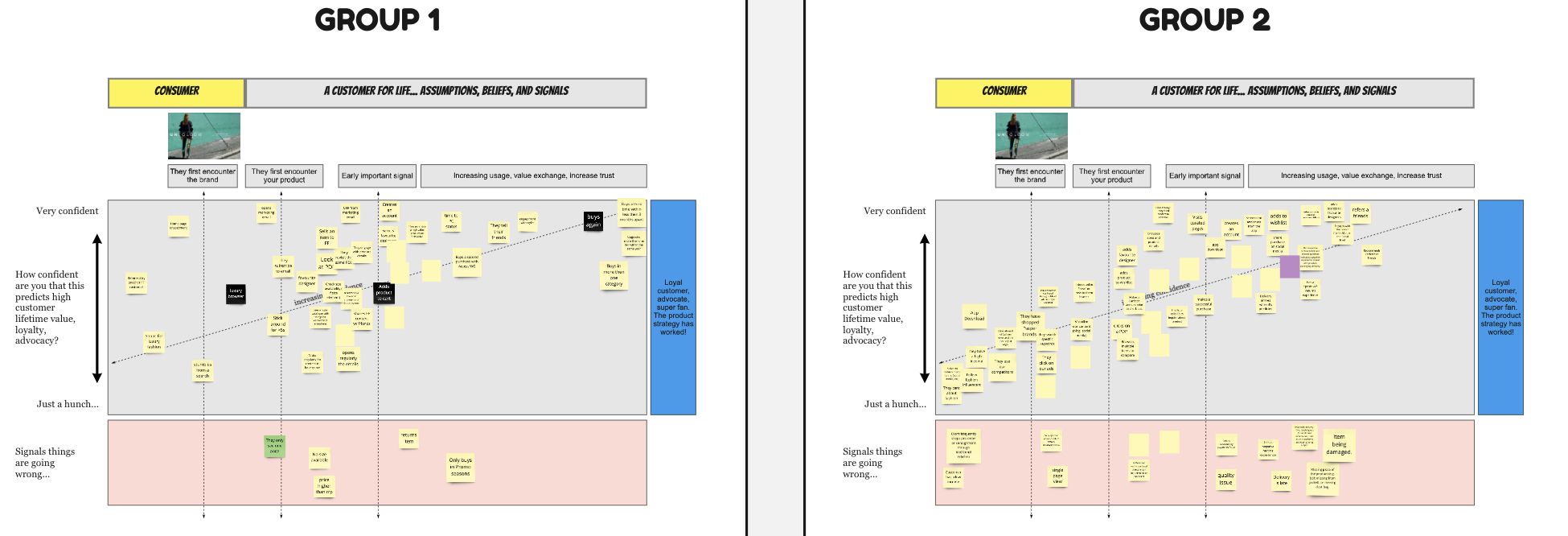

- TBM 49/53: Signals and Confidence

- TBM 50/53: The Curse of "Success" Metrics

- TBM 51/53: Coherence > Shallow Autonomy

- TBM 52/53: Real Teams (Not Groups of One-Person Teams)

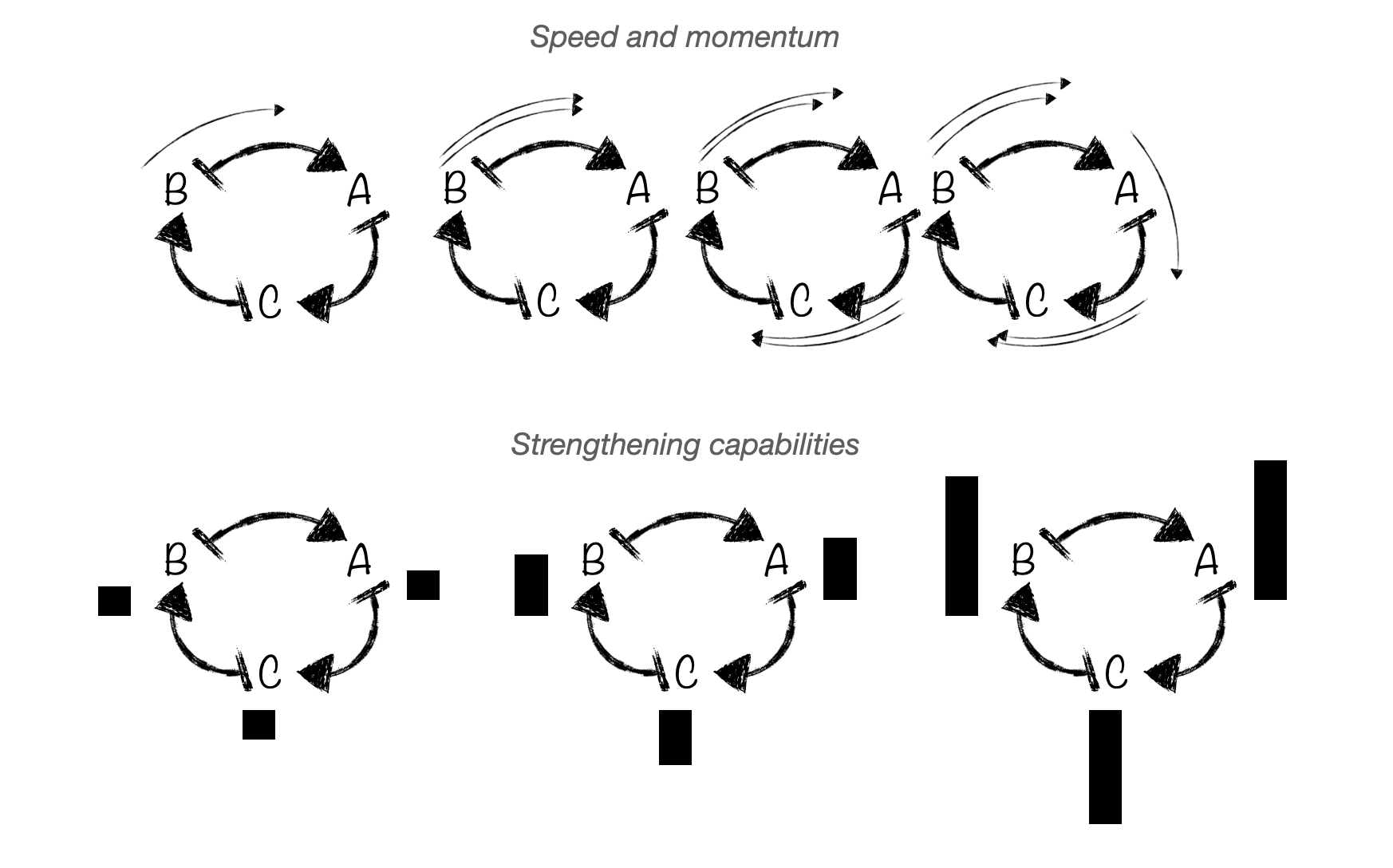

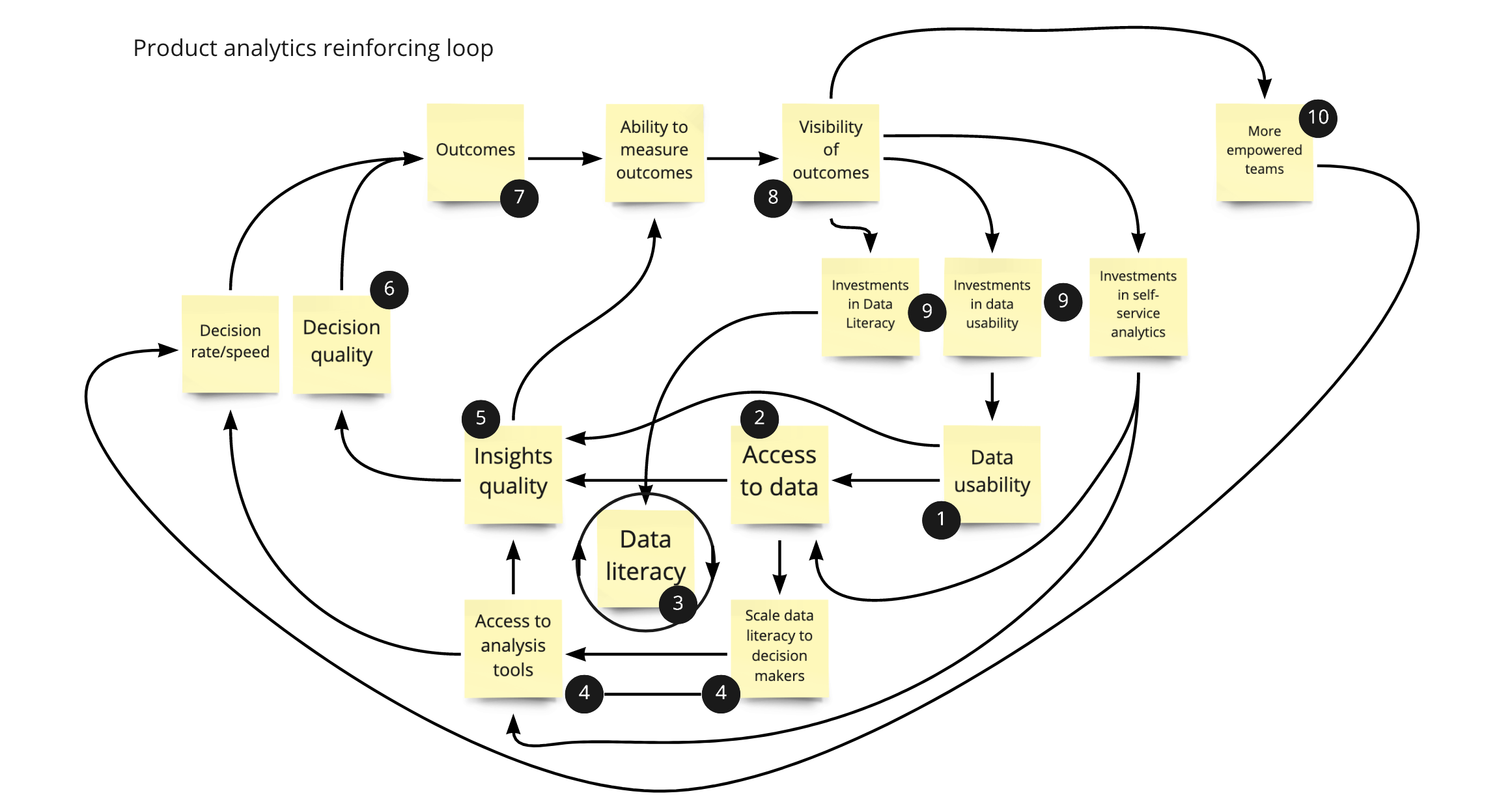

- TBM 53/53: Virtuous Improvement Loops

TBM 1/53: The Empty Roadmap

For the past year or so I have experimented with an activity.

I ask teams to imagine that they stop "shipping". Nothing new. No improving existing features. They keep their jobs, and fix major production issues, but otherwise stay idle. When possible, I ask them to refer to some sort of business dashboard or statement. How do these numbers change? They imagine industry press, coffee machine conversations, customer feedback, and board meetings. What happens with the passing months? Could X possibly go unchanged? Would you be surprised if Y doubled?

The responses are very interesting. It feels unnatural to imagine doing nothing (even if doing nothing may be the best decision). We're wired to keep ourselves busy and talk ourselves into our grand plans. In some businesses, the impacts of doing nothing are felt quickly. In others, teams find they can go a year or more without real "damage". A competitor gains ground. Cost of acquisition increases. Team members leave for a competitor (or to start their own businesses). It hits home that successful products are a slow burn, the result of many decisions over time.

To tackle the exercise, some teams review the past year. How did that work contribute to where the business is today? For new product launches, add-ons, packages, etc., it is easy to say "if we hadn't done this, then X wouldn't have happened." We gravitate to obvious connections, but that is a trap of sorts.

The purpose of this exercise is to surface assumptions, and to focus the team on the Opportunity. Yes, it is "not realistic". It takes some practice, but more than practice it takes freedom and safety. Freedom to explore a possible future without judgement. "Non-business" types (if there is such a thing) need to feel free to explore the problem-space.

Give it a try with a big cross-functional group! Folks with more familiarity with “the numbers” should be prepared to teach and guide. Folks with more familiarity with the long-term, slow-burn issues (market share, inertia, product phases, ecosystems, etc.) should do the same.

Back to Table of Contents | Found this helpful? Leave a tip... | Purchase EPUB/MOBI Versions of Book

TBM 2/53: Three Options

It may be simple, but the three options prompt is a powerful way to move conversations forward.

In a recent coaching session, a VP of Product was explaining the new strategy. "What three options did you decide against and why?" I asked. I assumed they had other coherent options that didn't make the cut. "Oh, I'd have to think about our meetings. For the last couple weeks I've been in pitch mode with the strategy we decided on," she replied. She showed me the presentation. It popped. But...no mention of the options she had considered and rejected.

This was a missed opportunity. Why? Explaining rejected options provides important context. It conveys rigor and decision-making integrity. The criteria and thought process become more understandable. Having only one option is scary. Sometimes that one option is just the middling, mash-everything-together, no-real-perspective grab-bag option: the best of the mediocre options. Or a desperate path.

Imagine you are a new product designer or product manager at that company. You join a couple of days after the launch of the big, bold strategy. You have all the regular questions. Good questions. Someone sends you a link to the VP of Product's presentation. "OK. This makes sense, but how about...?" You're missing so much context.

A great second and third option inspires confidence. It means the team made an actual decision. The VP of Product added a couple slides. It made a big difference.

Consider documenting:

What options did you consider but ultimately reject? Why? What might change that would inspire the team to reconsider those options? Walk through your assumptions. Describe your confidence levels. When will you revisit the current selected option?

Three options is also great for finding the right resolution for a team mission. Can the team identify at least three different ways to achieve the goal? If the team is tacking on a goal for outcome theater, well...the goal isn't very helpful. Call a spade a spade and build X. That's your bet. Did it work?

We define problems and opportunities to inspire creative problem solving and aligned autonomy. Say a team brainstorms potential solutions and comes up with fifty options. That might suggest that the playing field is too broad. Suggest the team narrow it a bit. If you find the right opportunity resolution, you'll have fewer compelling options.

This is a great test for OKRs. If the objective (the O) is Deliver X, well, you have no other option. Not a great OKR. Unless you really need to Deliver X. That’s OK in some cases.

Reflecting, this is one of those things that we know but forget. When it's done to us, we feel uneasy. And then make the same mistake ourselves. This is your reminder! Describe the three 2020 strategies NOT pursued. And when you frame the next problem for your team, ask yourself if you can dream up a handful of diverse options.

Oh. Remember. One option is always to do nothing. Who knows, maybe the problem will fix itself. Doing nothing is the ever-present option #4.

Thank you so much for checking out the series. I appreciate the opportunity to change up my approach to sharing content. Based on feedback, I'll change BM to TBM. As a new parent I can relate.

TBM 3/53: Experiment With Not On

Over the years, I have encountered many process experiments. Sometimes I have been the one doing the experimenting, but most of the time I (and my team) have been the test subject. Someone (often more senior, but not always) has an idea about how to improve something. And that something involves my team.

Being in the thick of that dynamic can be difficult. It can be hard to tell what is really happening. At times, it can feel like you are being experimented ON.

In my coaching calls at Amplitude, I observe this dynamic with the benefit of some emotional distance. Someone is planning an experiment, or is in the midst of an experiment. Someone is grappling with a coworker's experiment. Or both. Most of the time, it isn't called an experiment. Rather, it's the new process or the new way we're doing things.

In these calls, I've noticed a common pattern. First, the Why is missing, unclear, or lacks focus. And second, the people involved are not invited as co-experimenters.

There's a huge difference between:

OK. So here is the new OKR process. OKRs are a best practice, and management thinks they'll be a good idea.

or

Leadership has decided on the new success metric. Here it is.

and

In yesterday's workshop, we decided to try [specific experiment] to address [some longer term opportunity, observation, or problem].

We described the positive signals that would indicate progress. They include [positive signals]. We described some signals to watch out for. We agreed that if anyone observes [leading indicators of something harmful or ineffective], they should bring that up immediately. We agreed to try this experiment first over [other options] because [reasons for not picking those options]. Those were good options, and we may revisit them in the future.

[Names] offered to be practice advisors. They've tried this before, so use them as a resource. With your permission, I'm asking [Name] to hold us accountable to giving this a real shot. They aren't directly involved in the team, and they are unbiased.

We noted that this is a leap of faith. It isn’t a sure thing. We may very well experience [challenges] in the short term. Let's make sure we support each other by [tactics to support each other].

In a quarter, we'll decide whether to pivot or proceed. If we proceed, we'll work on operationalizing this, but that is not a given. As we try this, consider opportunities for future improvements.

Does this sound right to everyone?

The difference is stark. Yes, the second approach takes longer (at first, and maybe not, see below). Yes, it is more involved and messy. But let's face it: no one likes being the subject of random experiments. Even CEOs.

The second option is powerful and resilient. The first options are fragile.

There's another benefit here. I mentioned that the second option takes longer. Even that is debatable. People are more likely to give experiments a shot when the Why is clear, and when they get involved. But they don't necessarily need to design the experiment (though I think that can help). The experiment has a beginning, middle, and end. What's the worst that can happen? You go a quarter and recalibrate.

So I would argue that the second option — especially if you build up a track-record of keeping your promises — can actually be faster, and make it easier to get buy-in.

That’s it for this morning. I hope these short posts are helpful. Good lesson for me: putting the toddler to sleep often means dad falls asleep too. Cutting this post close!

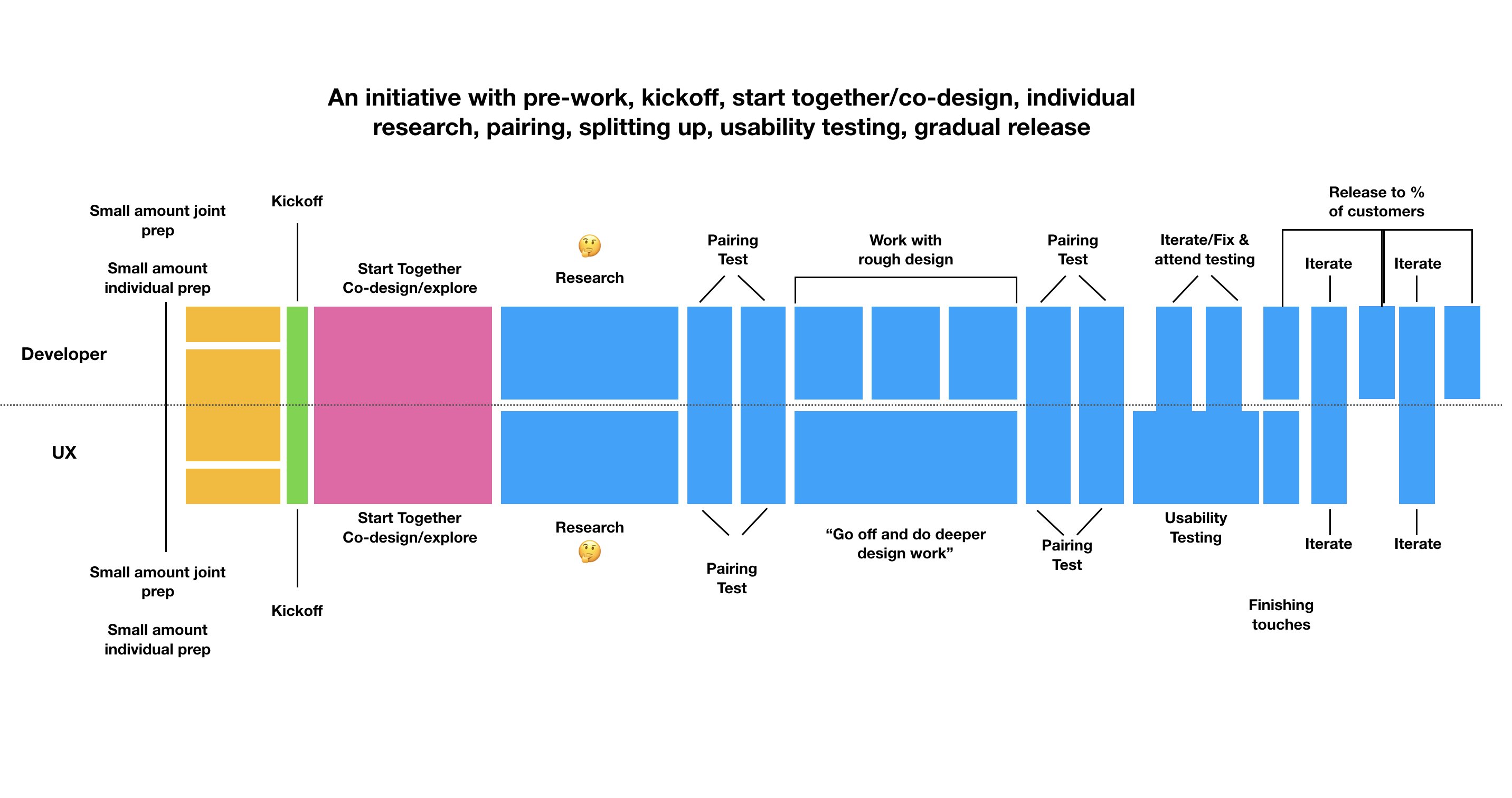

TBM 4/53: Teach by Starting Together

I am a big advocate for starting together as a whole team.

What is the opposite of starting together?

Most of the time it means doing early work on an initiative in a smaller group while the team focuses on delivering something else. The small group tends to be more senior/experienced. At the "right time", the official kickoff begins. Someone describes the work and the research, and the team starts working.

The small group approach tends to be more efficient in the short term. Meetings are easier to schedule and run. The conversation flows. Conflict is easier to tame and temper. "Fewer people are sitting around." There's less scrutiny. For roles like UX, starting in advance also carves out much needed space for less structured work. This is all true.

Some people get very nervous when they can't hear people typing (or when they themselves aren't typing). "We aren't paying developers to sit in meetings and play with stickies!" they say. I try to put myself in their shoes. If you've never seen co-design and starting together work, you're liable to settle on what you know: typing = progress.

My main problem with the small group approach is that it impacts learning. Less experienced team members don't get exposure to the discovery and "shaping" process. Seeing the final deck, mockups, or "high level stories" doesn't count. Instead of building product discovery chops across the board, we silo those skills. In my experience, resiliency decreases.

I'll always remember an activity where we paired more junior and more senior developers with a subject-matter expert CEO. Two customers, a Product Manager, and a Designer also attended. A UX Researcher facilitated. The CEO took off his CEO hat (and HIPPO hat). Together they grappled with a messy problem. It was rocky to start, and divergent, but after a couple days -- and fifteen customer calls -- they found their stride. "I remember when we always used to work like that!" said the CEO, "it was always so invigorating. I can see how this will save us time in the long run.”

There was a small group who did the legwork and prep for that effort. But they didn't start the effort without the team. Their primary role was that of context builder.

I understand all the risks here, and completely respect that many teams can't start together (or don't want to, and do just fine).

What I would suggest is an experiment. Some teams are successful with "rotations". They'll invite one or two less experienced team members to participate in discovery or research. The invited team member gets the support of her team and a greatly reduced workload, and commits to show up. When (and if...often this work results in a no-go) the effort "starts", she can act as a bridge to the discovery activities.

Give it a try. Let me know how it went.

I’m confident of one thing. That junior developer or designer (or marketer, or data scientist, or product manager) will learn something.

TBM 5/53: It's Hard to Learn If You Already Know

Recently, I found myself angry because someone was doubting me.

I was explaining one-day sprints, and how that practice had worked for my team in a particular context. Their questions smacked of mistrust (to me, at least). I asked myself, “Why don't they trust me? Why are they so pessimistic?”

A couple days later I found myself doubting someone. They were explaining how an accountability framework had worked for their team. I wasn't buying it (though I tried to remain respectful and curious).

Why did I doubt them? I thought they were solving for a symptom, not the actual problem. I also have strong opinions about individual accountability vs. team accountability. The framework they mentioned seemed to favor individual heroics over teamwork. I have firsthand experience with that going very wrong.

Alas, they sensed my skepticism. I don't have a great poker face, and genuine curiosity is hard to fake. Unfortunately, I squandered an opportunity to learn and help.

Working in the beautiful mess of product development is often about suspending disbelief. The challenge is that we're quick to ask others to suspend disbelief, but slow to suspend our own beliefs. We are quick to tout a growth mindset when it is growth we like, but slow to support growth in others that makes us uncomfortable. Quick: first principles when they back us up. Slow: first principles when they challenge us.

I’m reminded of Amy Edmondson's wonderful talk "How to turn a group of strangers into a team":

It's hard to learn if you already know. And unfortunately, we're hardwired to think we know. And so we've got to remind ourselves -- and we can do it -- to be curious; to be curious about what others bring. And that curiosity can also spawn a kind of generosity of interpretation.

"It's hard to learn if you already know" is a powerful statement, especially considering how people in cross-functional teams often feel it necessary to prove what they know (and defend it).

My intent with these weekly posts was to discuss something actionable. I keep a work diary. Lately, I've been trying to sense that exact moment when the threat response kicks in. And write down what's happening. I also try to keep track of when I'm the one doing the judging. Taking the time to write instead of respond immediately has been very helpful.

Maybe give that a try for a week?

TBM 6/53: Way and Why

I recently co-wrote a playbook about the North Star Framework. A common question after reading the book is "how do I introduce this framework in my organization?"

The advice I give is the same advice I give for any effort to introduce a tool, pattern, framework, or method.

Don't become the North Star Framework person. Don't become the OKR, design sprint, design thinking, mob-programming, or Agile person. Don't stop learning, of course. Don't stop trying to help using what you know. But don't lead with The Way.

Why? Because you run the very real risk of being forever pigeon-holed as The Way person. Dismissing and discounting The Way is easy. Now you have a horse in the race. Now you're the gullible one who thinks that a framework will fix everything. Now you're the person imposing your way. The Way is More Process. Even if none of this is true, you'll have to fight an uphill battle because it is easy to misconstrue intent.

I'll always remember receiving this feedback:

It feels like you are more concerned with us doing X than with us figuring out how to fix the problem.

That stung. Internal dialogue: Why can't they understand I am trying to help! But I could see their point. With roles reversed, I'm often the one who resents being told The Way. I like to figure it out for myself.

What should you do instead?

In my experience, it is more effective to lead with The Why -- the opportunity, problem, or observation. And whenever possible, it is more effective to speak for yourself instead of a vague "we" or "some people". For example, the North Star Framework addresses the need for “more autonomy, with more flexibility to solve problems, while ensuring my work aligns with the bigger picture.”

When you lead with The Why it is easier to test the waters. Do your teammates actually share your need and perspective? Do they see the problem a different way? Do they have ideas on how to help in that area? Is another opportunity or problem more important to address now?

The actionable thing to try is to experiment with distancing yourself from The Way, and focus on The Why. Start there, even when you are eager to solve the problem.

TBM 7/53: Learning Backlogs

Imagine we're mulling over some improvements to a workflow in a B2B SaaS product. We have some questions:

- What is the best way to present workflow options A, B, and C?

- What is the right copy to describe these options?

- Are workflow options A, B, and C the right options? Hold on!

- Will adding options to the workflow have any effect whatsoever on campaign efficacy? Is there a chance that they (the options) will actually reinforce anti-patterns?

- Some high ARR customers are requesting these workflow options. But is there something we're not seeing? Is there a way to change the game completely such that this workflow would be unnecessary?

- There are six similar workflows across the app. Is there an opportunity here to optimize how we show workflow options? Is consistency even important?

- Is our approach to executing workflow logic sound? Is there an opportunity to do some refactoring? How will we store this information? Do we need a history of field value changes?

- What are these campaign managers actually trying to achieve here? Can we lump all campaign managers together?

- What does quality mean for us here?

Note how these questions address different opportunities and areas of risk. Some seem to underpin the whole effort and scream "answer me first!" Some question the effort itself. Some are important, but we can safely delay answering the question. Some seem easy to answer, while others feel more difficult to answer. Even the less-than-clear questions are signals that we need to do more exploration.

(Tip: Try a quick activity with your team. Brainstorm questions relevant to your current effort or an up-next effort)

For each question we try to provide a bit more context. Sometimes we can answer questions with 100% certainty. Even then, answering those questions inspires a bunch of more difficult questions. So it is important to consider what chipping away at these questions might do for us. To do that, we add more detail for each question:

- Reducing uncertainty here will allow us to __________.

- The value of reducing uncertainty here (being less wrong) is __________. The cost of being wrong is __________.

- Progress towards reducing uncertainty here will look like __________. Our goal would be to be able to __________.

You get the idea. Try it. How does this help?

First, as a team we are able to sequence the questions. Which questions should we address first? Why? What research do we already have? Is it enough? Maybe we have a super important question with tons of uncertainty. Maybe a tiny bit of progress could be very valuable. This is an important conversation to have. Since we can only tackle so much at once, it also helps us focus our efforts.

With a sequenced list you can create a board to track these, limit research-in-progress, and expand on tactics. Which leads us to…

Second, it lets us converge on what we must learn first, before getting caught up in debates about how to learn. This is very important. It is easy to get caught in a battle of approaches to research or "testing" (e.g. "we should just run an A/B test there" vs. "we can figure that out with some good qualitative research"). When we focus on that battle, we tend to forget The Why. Is this even the most important thing to focus on? What does progress look like in terms of reducing uncertainty? Once The Why is squared away, it leaves room for professionals to make the call in terms of the best way to make progress.
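For teams that like to see the mechanics, here is a minimal Python sketch of a learning backlog (a "to-learn list") with a research-in-progress limit. It is a hypothetical illustration, not a tool from this post: the `LearningQuestion` fields mirror the fill-in-the-blank prompts above, while the 1 to 5 scales, the scoring formula in `sequence`, and the `wip_limit` in `pull_next` are assumptions added for illustration. The sample questions come from the workflow example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LearningQuestion:
    """One entry in a to-learn list. Field names and 1-5 scales are illustrative assumptions."""
    question: str
    allows_us_to: str          # "Reducing uncertainty here will allow us to ___"
    value: int                 # value of reducing uncertainty, 1 (low) to 5 (high)
    cost_of_being_wrong: int   # 1 (low) to 5 (high)
    uncertainty: int           # 1 (fairly sure) to 5 (no idea)
    progress_looks_like: str   # "Progress ... will look like ___"


def sequence(backlog: list[LearningQuestion]) -> list[LearningQuestion]:
    """Order questions so important, high-stakes, highly uncertain ones come first.

    The score is a hypothetical conversation starter, not a rule.
    """
    return sorted(
        backlog,
        key=lambda q: (q.value + q.cost_of_being_wrong) * q.uncertainty,
        reverse=True,
    )


def pull_next(ordered: list[LearningQuestion],
              in_progress: list[LearningQuestion],
              wip_limit: int = 2) -> LearningQuestion | None:
    """Start the next unstarted question only while research-in-progress is under the limit."""
    if len(in_progress) >= wip_limit:
        return None
    for q in ordered:
        if q not in in_progress:
            in_progress.append(q)
            return q
    return None


backlog = [
    LearningQuestion("What is the best way to present workflow options A, B, and C?",
                     "pick a layout", 2, 1, 2, "a preferred layout"),
    LearningQuestion("Will adding options have any effect on campaign efficacy?",
                     "decide whether to build this at all", 5, 5, 4, "evidence either way"),
    LearningQuestion("Do we need a history of field value changes?",
                     "design storage", 3, 4, 3, "a storage decision"),
]

for q in sequence(backlog):
    print(q.question)
```

The sort key only makes the sequencing conversation concrete. The point of the exercise is still for the team to agree on what to learn first before debating how to learn it.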

Teams often don't work this way. Instead, it is about pitching the answer and/or communicating certainty. Or getting caught in analysis paralysis trying to be certain about all the things. Or fighting to "do research" without any clear sense of how those research efforts fit into the bigger picture.

So...start with the learning backlog.

If the word backlog freaks you out… it’s OK to call it a to-learn list.

Maybe do this as part of a kickoff.

TBM 8/53: Product Initiative Reviews

Shorter post today.

Run product initiative reviews.

You can do reviews even for prescriptive, feature-factory-like work. Someone had an outcome in mind, even if it wasn’t stated explicitly. What happened? What did you learn? Did that “no brainer, we know we have to build this” feature have the expected impact?

You can also do more general learning reviews. What has your team learned recently? It is ironic that a high percentage of teams run retrospectives on how they work (process, output, blockers, etc.), but not retrospectives on the work itself.

The key here is vulnerability. Present as a team. Make sure to admit your challenges, and give a balanced assessment. Don’t play the blame game. Don’t do success theater. Invite other teams and people from across your organization. This is where you can have great impact. It sends a great message to other teams.

I met with a team that was contemplating a big re-org (real product teams, etc.). “What is happening right now?” I asked. “Is anything getting shipped, and is it having any impact?” Yes, stuff was getting delivered. But no, no one had any sense of whether it had any impact. That would be a good place to start, right? The new structure will not magically solve that problem.

OK. Makes sense. Easy enough. But you’re so busy!

And this is the beauty of reviews.

Just by showing up, you’ll be ahead of 75% of other teams.

TBM 9/53: Some MVP and Experiment Tips

Note: This week’s newsletter presented a dilemma. Yesterday, I wrote some tips for a teammate and got a bit carried away. I figured the tips might be more broadly helpful, so I published them on cutle.fish. And then I realized I should have waited to send this out with the newsletter. In the spirit of pacing myself, I am going to cross-post instead of writing two posts. Apologies if you’ve already seen this.

I sometimes find myself emailing/sharing advice lists. Here is one related to experiments (used somewhat interchangeably with MVP) that I sent out today. It is not about experiment design. Rather, I focus on a situation where a team is spinning up a lot of experiments (for various reasons) and is encouraged to experiment, but may be struggling with making it all work.

- Learn early and often. We should not be afraid to try small experiments to learn, and we should not be afraid to release things early and often to gather feedback and iterate. A good rule of thumb is that you should release before you are comfortable, and make sure you are prepared to learn. Our ideas may seem precious, but it is critical (and humbling) to get things into the world. Challenge big batches of work like crazy. Can we achieve 90% of the outcomes with 10% of the work? Can we learn 90% of what we need to learn with 10% of the work? Or nothing “shipped” at all? Do we have the requisite safety to embrace “failed” experiments?

- Going “faster”. There are only two real ways to go faster…reduce the size of “batches” and/or do less at once. Adding people tends to make things slower in the near term (and sometimes the long term). Busy-ness does not equal flow. For that reason, really plan on focusing your experimentation efforts. Limit your experiments in progress. Our goal is high cadence, not high velocity (there is a difference...imagine a cyclist going up a hill in an “easy” gear vs. a “hard” gear).

- Partner. Having partners in your experimentation efforts is critical. They help you de-bias, help you challenge your assumptions, and help you hold each other accountable to working small and learning quickly.

- Take experiments seriously. Be diligent about framing your MVPs and experiments. How will you measure this effort? How will you reflect on progress? What are your pivot and proceed points?

- Consider blast radius. We should be open to the idea that we cannot control everything, and that on a daily basis there’s a ton going on that we will not know about. Someone might do something that impacts your world, and that is OK. Assuming positive intent is critical. That said, if you are the one running the experiment, it is vital to be mindful of the blast radius of your work (and of how your work is perceived). Communicate. Give people some notice. And commit to the last four points below.

- Kill Your MVPs. A good rule of thumb is that you should be able to kill your MVP. It should not create promises or commitments. It should not create dependencies. It is largely a throwaway vehicle for learning. The risk is a million MVPs that just create cognitive overhead, high cost to maintain, and serve as a distraction for the team. Consider this. If there’s not a 50% chance of your MVP “failing”, there’s a good chance you aren’t taking enough risk to learn new things.

- No side-channels. Be cautious about creating a “side-channel” of unofficial work (the stuff you really want to be doing while you battle business as usual). Why? 1) You’re keeping your teammates in the dark, and 2) you will burn out! There are only so many hours in the day. How does this relate to experiments? Try to elevate your experiments to first-class, visible work. Ask teammates to hold you accountable.

- Leave room to incorporate learning. Pushback against MVPs is often rooted in pragmatic fear. People never feel they are able to go back and refine what they release (integrate learnings, refine, etc.). So they increase scope as a form of craft-preservation. By creating a large batch of work, we assure ourselves some ability to get it right. What to do? The lesson here is that MVPs should be considered as an integral part of a larger stream of value creation. They are not an excuse to cut corners and bail on the effort. The goal is to generate learning and INCORPORATE that learning...not to ship and move on leaving a ton of unrefined work. Key insight: advocate for the larger value stream and learning objective first. After that, running experiments is easy/easier.

- Not a workaround. When we feel thwarted (perhaps other people are super busy and can’t assist), it is tempting to spin up individual work that we can completely control. This is natural and very tempting as we are wired for forward momentum. But without a structured approach to learning you run the risk of just making yourself more busy, adding even more work in progress, and potentially working in opposition to your teammates. Instead, is there a way to help unblock your teammates?

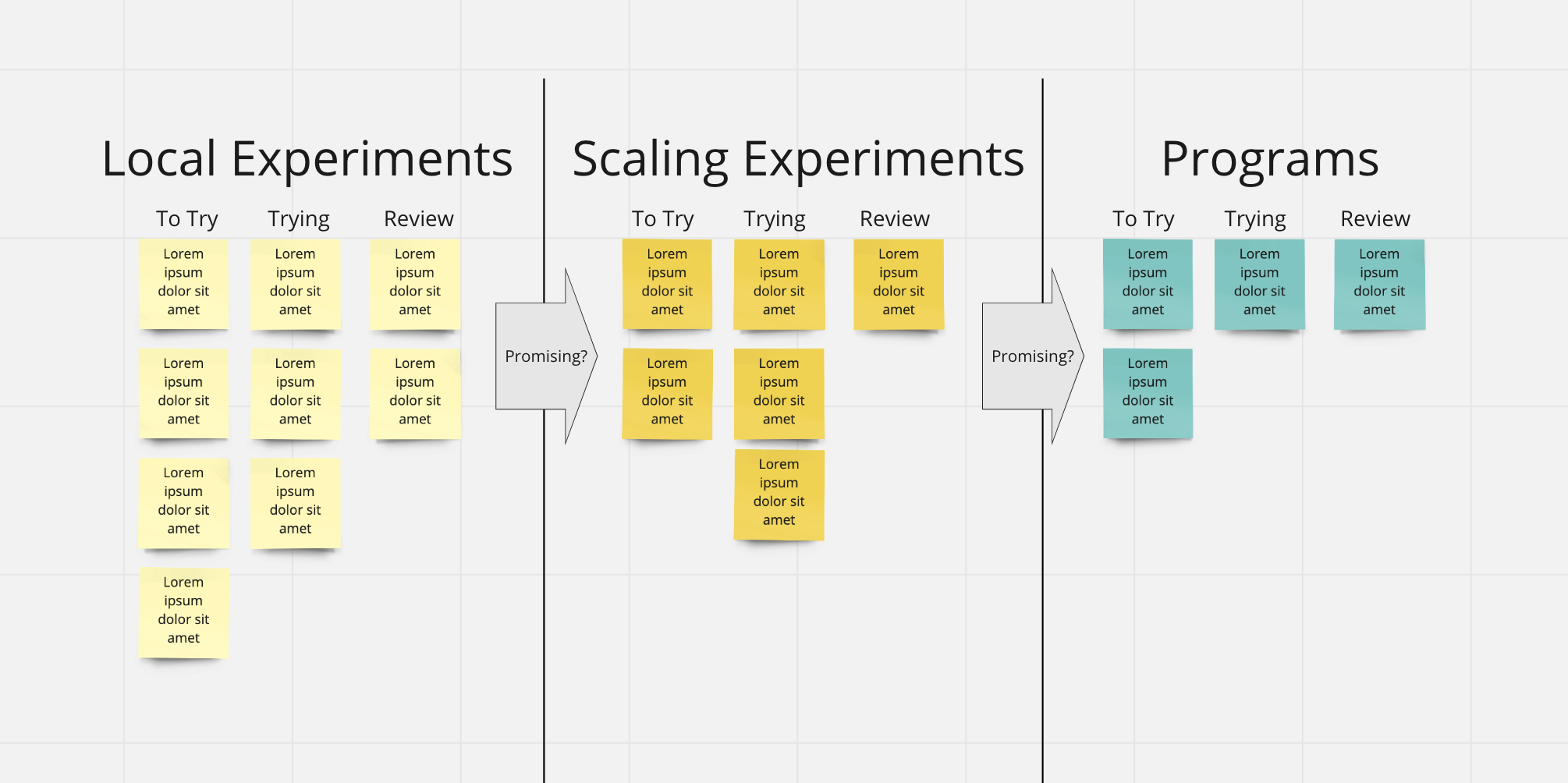

- Scaling Up and Out. Scaling up/out successful experiments requires collaborating with others, thinking about impact across teams, and “formalizing” the bet. Often, people complete an experiment in the small and jump immediately to scaling it up/out in isolation. The preferred approach is to take what you learned, and then attempt to frame an integrated program around that. Not all successful MVPs are good candidates for scaling up/out.

TBM 10/53: Hammers & Nails

The tools we know bias us to solve problems (and see problems) in particular ways. Which makes sense. We’re eager to help, and excited to help when we know how to help. However, we all suffer, on some level, from putting our craft at the center of the universe.

I hear this all the time from technical founders. “My bias is to build! That is what I am trained to do! I get a lot of satisfaction when something breaks, or a customer brings up a problem, and I jump in and fix it with code!” Or from designer founders. “I spent way too much time trying to pick apart the whole experience. That is what I’m wired to do!” Or marketers. “I jump straight to go-to-market strategy!” Researchers. “We need a two-month study here!” The pattern is similar.

In the same organization you might find a dozen “if only”s. If only we had more time to focus on X [research, DesignOps, architecture, UX, implementing Kanban, strategy, interviewing customers, looking at analytics, quality, etc.], everything would be better! Where X is something you’re good at, and where you see the flaws, imperfections, and opportunities.

Sometimes we inadvertently diminish others. In advocating for more time for design, designers cast developers as robot design assemblers working in the Agile-factory salt mines. When trying to advocate for a team that works together, developers describe designers as wanting to do “ivory tower design”.

I’ve observed, over the years, very well-meaning people slip into condescending and belittling language in an effort to advocate for (and defend) their craft.

I mentioned this on Twitter recently, and someone explained that product managers should somehow rein everyone in: everyone else is biased, and the product manager can magically be without bias and “do what is best for the product”. The issue here is that product managers also succumb to the “if all you have is a hammer” problem. They are not immune to bias.

Someone else mentioned “healthy tension”. That you NEED people to be somewhat myopic about their craft area, and you need them to battle it out. “The right way will be in the middle!” Maybe? Would that imply that those involved are at an equal level? Is the middle always right? How do you keep the healthy in healthy tension?

Just knowing we will tend to see our craft at the center of the universe is a start. Ask yourself, “can I build better awareness of how the other disciplines can contribute? How can I use my craft to learn from others, and make their work shine? How can I view our roles as more overlapping?”

As a simple next step, maybe brainstorm 10 things that your area of expertise might bias you to do/think/say. And then write down how you might challenge your own biases. I’m not suggesting your instincts are wrong, rather that we tend to skew in one direction.

TBM 11/53: The Know-It-All CEO

I hope everyone is safe and doing their best to keep their community safe.

My 83-year-old mother, a lifetime smoker with chronic bronchitis, is trying not to leave her house. She's terrified, but loves chatting with her grandson via Skype every day. "When I was a child we survived the occupation hiding in our house, so I hope I survive now to see Julian in person again!"

I had to cancel this week's trip to Paris. La Conf' will happen in September. I have scheduled a free, virtual three-part lab series on March 19, April 2, and April 16 at Europe-friendly times. I'll record each session and make those recordings available to people who register. Click here to learn more about the series and sign up. Invite coworkers. It'll be decent (I hope).

To all the teams struggling with being remote, I only have one bit of advice. There are a million people giving you tips. But the blocker is not good ideas. Advice is everywhere (especially from founders who have gone all-in on being remote). The actual limiter is your team's ability to inspect and adapt. I made a quick video called The Work That Makes the Work Work that talks about this. Give it a try.

And now back to our regular programming. Here's a thought experiment for you...

Say you work for a CEO who is a world-renowned expert in a specific domain. She has spent twenty years interacting with a specific customer/user persona. She knows it like the back of her hand. In four years she has grown a startup from 3 people in a bedroom to a team of 150. Raised millions. Revenue is growing. Happy customers. She dictates the roadmap based on her conversations with customers. It's top down. And you know what? Often...her instincts are spot-on. The company is doing great.

But as the product becomes more complex, and customer personas multiply, she's losing her magic touch. She's not a designer, engineer, or product manager. The team grumbles about being "outcome focused" and "problem vs. solution" (as a proxy for saying "we're making bad decisions"). The CEO remembers how fast the team moved when it was 8 people in a room. UX and technical debt accumulate. Drag increases. Tension rises.

Common challenge. What do you do? How do you resolve this? How do you move forward? On one hand, you have this amazing CEO with tons of experience and knowledge. You want her to help. But her strengths have now also become a bit of a liability.

Here's something I have learned about this situation. There are two things going on: how decisions get made (who, when, how, etc.) and the mechanism the organization has in place to reflect on decision quality.

A big mistake change-agents make is addressing these two things as if they are the same thing. It doesn't matter whether the CEO makes all the decisions or teams have 100% autonomy. In both cases you'll need a mechanism for describing your bets, and then reflecting on those bets. In both cases -- and in my experience few CEOs would disagree -- you need a way for people on the front-lines to trace their work to company strategy and The Why.

Back to our scenario with the CEO. Trying to wage a philosophical battle over who decides what is not your best option. Telling the CEO she isn't a designer, product manager, or engineer is not your best option. Both will inspire a threat response. A safer approach is advocating for making bets visible, connecting the "tree" of bets, and doing decision reviews.

This works for two reasons: 1) the best cure for the CEO-who-knows-everything is to look at outcomes, and 2) in the future, when you've pushed decision-making authority down to the team level, you'll STILL need this muscle.

Action Item: Decouple decision making approach from visualizing bets and reflecting on outcomes.

TBM 12/53: Not BAU

In these hectic times, I am very hesitant to overload people with more content. We're all grappling with lots of difficult news.

So a brief post today.

Our work community can be a source of strength and support. Or it can be an added layer of anxiety on top of what we are all experiencing today. This is not business as usual. This is not "going remote". We are launching into uncharted waters. We are WFHDP (working from home during a pandemic).

Companies trying to figure out how to make people productive at home are missing the point. They are actually creating more process overhead, and more tension.

More than ever, it is important to limit promises in progress, change in progress, and work in progress. Work smaller. Leave a ton of slack in the system. Do way less, and slow way down. Limit your planning inventory. Work in smaller batches. Be more deliberate. More supportive. Sustainability and routine -- with minimal cognitive dissonance and tension -- are key. Leave tons of bandwidth for care and community.

We had a wonderful celebration at work today. Moments of goofiness help. Julian loved all the backgrounds!

The news? We launched the redesign of the North Star Playbook along with a downloadable PDF. Did we have to cut scope? Absolutely. What I am most proud of is that the team put supporting each other first.

TBM 13/53: Good Idea?

File this under "common sense, but you'd be amazed how few teams do this."

Before brainstorming solutions, organize a team activity to design a judging/ranking guide. How will you pick the "best" idea? Try to make the guide accessible. Avoid insider language. For example, "delighter", "fits the strategy", and "customer pain" don't tell us much. One trick is to stick to structures like:

- Probability that this will increase [some specific impact]

- Confidence range that this will increase [some specific impact]

- Degree of alignment with [specific strategic pillar]

- Likelihood this will help us learn more about [learning goal]

- Ability to approach incrementally vs. in one large batch

Examples:

- Probability that this will decrease average time-to-completion for the account setup workflow (from # to #)

- 90% confidence range that this will decrease support requests related to failed account merges (from #/week to #/week)

- Degree of alignment with our strategy to pass the rigor test with senior engineering leaders, who will then, in turn, accelerate the sales cycle

- Likelihood this will help us learn more about the unbanked persona with regards to their approach to money transfers

The goal here is to improve conversations, not manufacture certainty.

I've seen a bunch of prioritization spreadsheets over the years. 90% of them were more theater and faux rigor than substance. Add some weighting here. Whoops...that doesn't look right...that should be 4.2 not 4.1! How do we settle these two items tied at 92.1??? Wait, the CEO wants us to do that one...should we add a column called CEO DESIRABILITY?

What was missing? Conversation. Tweaking. Challenging assumptions. Refining language. And capturing that language in something reusable. You will notice that the implicit strategy will emerge from these conversations. Don't be afraid to try more subjective criteria, provided the conversations are productive.

Various facilitation approaches work; I don't want to be too prescriptive. Experiment with individual brainstorming, transitioning to teams of two, and then larger groups. Try a contrived, less contentious scenario. And resist the urge to put this in a spreadsheet. That's where the faux rigor starts.

You know you are on the right track when you can comfortably plug in multiple solutions and have a good conversation. If only one solution “fits”, you’ve made this too prescriptive.

TBM 14/53: 1s and 3s

Imagine that at any given time there is work happening across a series of time-scales. I use a basic system of 1s and 3s. It looks like this:

- 1-3 hours

- 1-3 days

- 1-3 weeks

- 1-3 months

- 1-3 quarters

- 1-3 years

- 1-3 decades

Every 1-3 hour bet connects to a 1-3 day bet. Every 1-3 day bet connects to a 1-3 week bet and so on -- all the way up to 1-3 years and 1-3 decades. The ranges are broad enough not to get people hung up on estimates, but narrow enough to reason about. And they are all connected (very important).

Team members should be able to trace their 1-3 hour work chunks all the way up to the company's 1-3 year bets. Without skipping steps.

Rough, Contrived Example:

- 1-3h: Research how we've been storing transaction types

- 1-3d: Adding new transaction type autocomplete logic

- 1-3w: Less error-prone transaction classification

- 1-3m: Improve workflow efficiency for bookkeepers (>15 person teams, high transaction volume, multi-currency)

- 1-3q: Addressing challenges of larger bookkeeping teams

- 1-3y: Expand sales opportunities with larger, multi-national orgs

We could layer in things like assumptions, facts, measurement, and more persona information. You can get way more detailed. A 1-3w bet will likely have many “sub” bets, etc. The key idea is to explore the story and connections.

It is also a handy slicing forcing function. You can typically get smaller AND bigger (important given that many teams are so focused on “the sprint” that they lose sight of the bigger picture).

In doing this exercise with many teams, I've come to see how often teams bump into a "messy middle" problem. Work in the near term is clear (people come into work and want something to do). Work in the long-term fits on a slide in an executive's deck. Turns out making slides is easy.

It is the messy middle bets/missions in the 1-3 month and 1-3 quarter range that are far less coherent.

Give it a try...

TBM 15/53: Why Can't This Be Like...

"Why can't this be like The Redesign?"

"What do you mean?"

"The Redesign was such an amazing project. It shipped on time. We won awards for it. Customers seemed to like it. Everyone seemed committed. And now, I don't know. We're not executing. There's no sense of urgency."

Have you had a mythical past effort hanging over your team's head? Or heroic stories from a past era — back when "things just got done"? The mysterious part of this, in my experience, is that people seem to have amnesia about what happened:

- The CEO cleared the decks so the team could focus.

- Everyone involved committed to a daily meeting and worked to remove blockers immediately.

- The team had direct access to customers. The team was able to choose its stack and tools.

- It was early in the company's history, and there was no shortage of "low hanging fruit" to address. The company was selling to a single, early-adopter persona.

- Work did just get done, but the team cut corners that they later had to address. The effort was not sustainable.

An example from my career.

A team split between two countries was the "problem team". There was lots of finger pointing and comparing. Why can't they get it together like Team X? I kept trying to defend the team by explaining the extenuating circumstances, but that made matters worse.

Luckily, things took a turn for the better. And I learned a lesson.

A new architect joined the company. First, he got everyone in the same room (before, it had been a mess of meetings between middle managers). They committed to resolve one issue a day during that meeting, even if it took a couple hours. Over the course of the next month they were able to get the initiative back on track.

I have no magic cure for this, but I have two thoughts (based on my experience):

- Once people have formed their narratives of past events, it can be very difficult to change that narrative. I'd argue that trying to do so is a losing proposition and actually makes the situation worse. They feel threatened. You get bitter.

- Instead, focus on supporting an environment where a new, compelling narrative can emerge. In the example above, the architect nudged the system. In a couple months, other teams were asking for details.

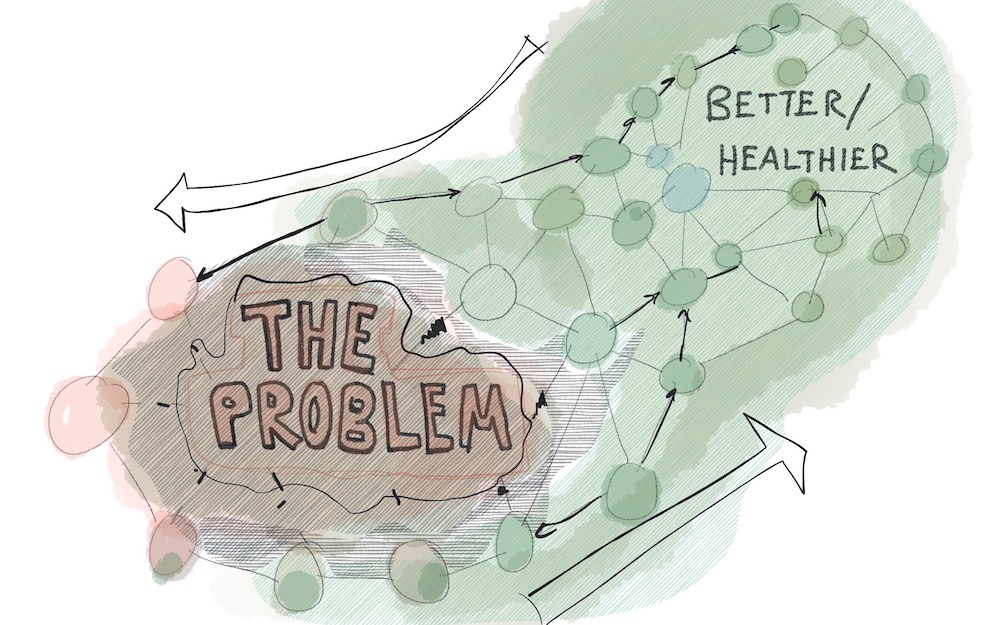

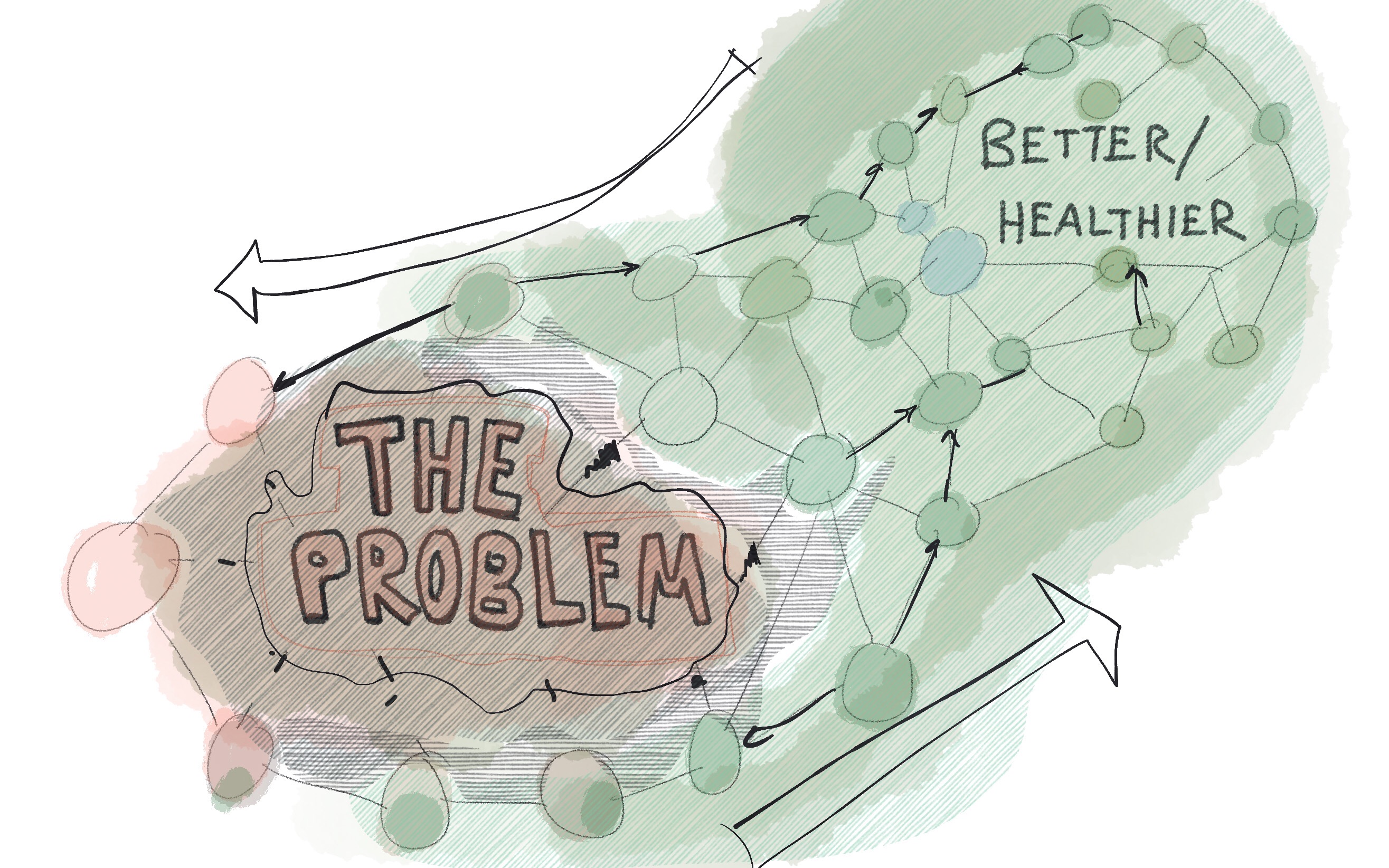

"Starve" the problem, and the tired old stories, by supporting something better and healthier. Along with your teammates, write a better story.

TBM 16/53: The Boring Bits

Cleaning up meeting notes. Putting in the time to run a great activity. Sending a link to the one-pager folder two days in advance of the workshopping session. Doing the pre-read and taking good notes. Re-taping the physical kanban board to reflect the new working agreements. Running a meaningful offsite. Writing the weekly recap.

These are the boring bits that busy people often don't have time for. It's crazy lately! We've got to be nimble! Who needs that bureaucratic bullshit? "Individuals and interactions over processes and tools!"

The skepticism and reticence have merit. We've all experienced crappy management at some point. We've all endured process that oozed distrust and in no way benefitted customers and the team. Compliance by Jira. But there's a good deal of boring but necessary work needed to help a product team do meaningful work.

This work often goes under-appreciated. And in some cases ridiculed and diminished. But while tactics (and org culture) vary, most effective teams figure out how to make (some amount of) it happen.

A big challenge is that some things suck at first. Value emerges with practice. Take learning/decision reviews. Learning reviews are a bit nerve-wracking when you start out. They are a lot harder than showing off a new feature. You have to unravel your process and decisions. The folks listening need to pay attention and work hard to ask good questions.

It is easy for a team to quit after a couple tries and get overwhelmed with a new, shiny project. Many teams treat the meta-work as something you do on top of the "real" work. There's no time. But if you keep at it -- take the leap of faith, and carve out the time and energy -- there's a good chance your team will benefit.

Another quick example: learning backlogs. Sure, this makes sense. But it takes just a bit of extra work and focus. If you’re maxed out or too rushed (or chasing efficiency), you’ll drop the practice.

Final one: a coworker who used to dedicate one full hour of prep PER ATTENDEE to important meetings like kickoffs. To many, that seems like a waste. But no joke…the return on investment was huge.

How do you build the trust of your team to take this leap and give it an honest shot before nixing the experiment?

- Commit the time required to make something a habit.

- Frame it as an experiment.

- Limit change in progress.

- Be willing to walk away if it doesn't work. Engage your team in detecting whether something doesn't work. Engage your team in designing the experiment.

- Be crystal clear when you expect a dip...a period of "this sucks".

- Lead by example. Don't be the first to bail. Do your part. Take the notes. Run the meeting. Write the follow ups.

This is on my mind lately as some very effective teams have shared their "process" or system with me. Of note, the best seem to strike an interesting mix between rigor/repetition and being flexible and adapting continuously. It isn't one or the other. They try things that don’t initially make sense or seem efficient. And quickly jettison those things after giving them a fair shot.

Andric Tham describes this nicely:

So, how do you go about building support for the boring bits? Let me know.

TBM 17/53: Measuring to Learn vs. Measuring to Conform

Are you measuring to learn, or measuring to incentivize, justify, and manage? Both needs are valid in context, but teams (and frameworks and processes) often confuse the two.

Take a quarterly goal. Once a goal is set, consider what happens to the perspective of the team. A week in, do they challenge the validity of that goal? Do they pivot? Consider a team spending weeks and months converging on the perfect success metric. Great, you've defined success, but the reality is that the metric is a hypothesis. It encapsulates dozens of beliefs. Understanding success is a journey, not an end-point, and manufacturing the definition of success can set you back.

That doesn’t mean goals — and measuring to check and understand progress towards goals — aren’t effective. But it is important to be realistic about what we are hiring goals (to stretch the jobs-to-be-done metaphor) to do.

I try to remind teams that if you're 100% certain about something, there's a risk you are in a commodity business. But how about A/B tests? "We need proof!" "We should apply scientific principles!" "Facts not hunches!" A/B and multivariate testing is appropriate in context, but not all contexts by any means. Truth be told, some companies known for their A/B testing acumen (though I’m sure they are printing money) offer crappy experiences and chase local optimums.

I say this as someone very passionate about product analytics, measurement, and data literacy. At Amplitude, our most effective customers use an array of approaches. The key: use the right approaches for the task at hand.

The same inertia pops up when the team needs 100% confidence before pursuing a strategy. Someone wants PROOF. Data as a trust proxy. When you dig and ask about risk-taking in general, you find a classic juxtaposition. There's a tension. The org empowers some people to take risks -- "big, bold risks!" -- and requires other people to provide PROOF ("so what is the value of design, really?"). There's a veneer of rational decision making, but truly rational decision making would incorporate uncertainty, acknowledge priors, and encourage a portfolio of bet types.

Being data-informed and data literate (both qualitative and quantitative data literacy) is itself a learning journey. It is iterative. You ask questions and refine those questions. You figure out you are measuring the wrong thing. You refine. You nudge uncertainty down, and then hit a false peak. "Oh no, that turns our mental model on its head!"

The action item...chat about the differences between measuring to learn and measuring to incentivize, justify, and manage. Are you making the distinction clear?

TBM 18/53: Blank Slates, Seedlings, Freight Trains, and Gardening

Here is a simple activity you can do with your team. I’ve tried it about a dozen times now with good results.

The basic idea is to use common (but salient) objects, activities, and ideas to spark a conversation. I have given a couple examples below, but you could easily come up with your own. Importantly, don’t pick things that are only positive, or only negative.

For each item, I have provided some example tensions and alternative explanations. I have also given some question examples. Over time you can evolve your own. If you are feeling adventurous, bring pictures and/or draw the objects.

Blank Slates

Starting over. The blank page.

- A chance to "start over knowing what we know now"

- Feel exhilarating and fun, and intimidating and disconcerting

- Involve loss. Involve leaving behind the "old thing"

- Sometimes involve leaving people and places behind

- An opportunity to work in new, unconstrained ways

- Could involve discounting prior work. Not honoring the past

- Easier than working with the good in the status quo

Where do we feel the urge to start over? Why?

Where have we started over recently? Why?

What did it feel like to start over?

Walls

Support and divide.

- Space to focus. Prevent distractions

- Contribute to structural integrity. Safety

- Some feel naturally placed. Some feel awkward, artificial

- Can emerge through repeat passage and erosion

- Restrict flow of information, access

- Might need "doors and windows"

Where do walls divide us, but are necessary?

Where have walls just appeared?

Which walls should we strengthen? Bring down?

What keeps certain walls in place?

Examples of things that are walls to some, and doors to others?

Seedlings

Protect and nurture.

- Need protection. Fragile. Need water

- Will hopefully grow into something bigger and stronger

- Not all will thrive. Need to pick and choose

- Doesn't "pay off" immediately. Is not fruit/flower-bearing yet

What efforts must we protect?

Are we doing a good job of protecting those things? Do they get a chance?

Do we have enough seedlings planted?

How do we balance caring, and moving on?

When does a seedling graduate?

Freight Trains

Not if, but when. But why?

- Have lots of inertia. Heavy. Lots of momentum

- Capable of scale and “moving stuff”

- Run "on rails". Travel predetermined routes

- Repetitive, known approaches, efficient, transactional

- We may not question why they exist. Run "just because"

What are our freight trains?

Where do they serve us?

Where do they run without us questioning their value?

Gardening

Weeding and pruning.

- Required for sustainable growth and health

- Easy to overlook and get reprioritized

- Easy to get obsessed with gardening. Don't want to "over-garden"

- Less flashy. Improvements are less visible

- Makes other things possible

Where must we do more gardening?

How can we do the right amount of gardening?

What does gardening make possible?

TBM 19/53: Drivers, Constraints, and Floats

I wanted to share an amazingly useful idea I got from Johanna Rothman and her book Manage It! (2007).

Initiatives have Drivers, Constraints, and Floats. Drivers “drive” the effort. Constraints constrain. And floats are levers that you are free to move.

The basic idea is to minimize the number of drivers, minimize the number of constraints, and increase the number of floats. You may be familiar with the Project Management Triangle and the saying "Good, fast, cheap. Choose two." I prefer Drivers, Constraints, and Floats for product work because our work may be driven by many things. We are exploring viability, opportunity, and value instead of getting paid COD by delivering a project.

Let’s look at a non-software example. A trip!

>7d, <14d. With the kid. Outdoor stuff. Some culture. Before he's 3. <$200 a day. Naps at 1pm. Peanut allergy. See Jane in London. Use miles. Time to relax.

If you’ve traveled with kids, you’ll know that this type of planning feels like three-dimensional chess. Something has to give. What are you optimizing for? Relaxation? Which constraints must you navigate? Naps at 1pm? Where are you flexible? Can Jane fly to Amsterdam?

Common sense, right? Yet with product work we often load up on drivers and constraints. We want to keep two personas happy. Has to work on mobile. Coordinate with the data engineering team. Grow X while growing Y. What about the global re-design? Oh, let’s show it off at the conference. Why not? While we are at it let’s [some thing]!

In the book, efforts with >2 drivers, >2 constraints, <2 floats are almost guaranteed to fail. Once you internalize that idea, there are three key challenges:

- Knowing when a driver, constraint, or float actually exists!

- Knowing when certain constraints will actually matter. For example, you need some marketing experience to know what “showing it off at the conference” will actually entail.

- Knowing how to narrow drivers. It takes experience to understand how subtle differences can dictate whole new approaches and how seemingly related drivers can send you in circles.

The idea itself is “simple”. Application is much harder and contextual. You need a radar for it. You need to catch yourself and others trying to "thread the needle" and play three-dimensional chess. The driver(s) need to be insanely (almost comically) focused.

For your current effort, what are the drivers, constraints, and floats? Can you narrow and sharpen your drivers, reduce constraints, and find new floats?

TBM 20/53: Questions and Context

My friend is a chef.

She has two modes: the "don't worry, it will be great" mode, and the "ask a million questions" mode. Either way, the food is amazing.

I will always remember when she helped us with a special party and asked us a million questions. What fascinated me was that she didn't ask things like "ok, do you want chicken or beef?" She asked us for stories about memorable meals, our families, and our guests. She asked about guest allergies (of course), but also asked about favorite wines, and music that captured the mood.

She took all that information and put together an amazing, almost poetic, meal. Which shouldn't surprise anyone. My friend is a chef! That's what she's great at!

Back to product and the beautiful mess. An experienced architect was grilling the product team on their product strategy. He was getting frustrated. A product manager Slacked me something like "He doesn't trust us at all, does he?" I tried to stay curious. It turned out that the architect's questions were coming from a very good place. He was contemplating a difficult-to-reverse system design decision. To make that decision he needed non-obvious information about the product strategy (even if the answer was "we don't know").

Once I knew the why of HIS questions, I was able to give him the information we had (and detail what we didn't have).

I don't regard these two examples as all that different. In both cases, you had an expert asking questions so that they could do their job. The difference was that we were good friends with the chef. We trusted her and her questions. Meanwhile, the architect was in conflict with the product team, and didn't explain his line of thinking.

It occurred to me recently that so many challenges in product development spring from what Amy Edmondson refers to as professional culture clash. An executive saying "build this" may not have the background to understand the product development nuances. It is easy to assume the worst, but they are trying to help by telling you exactly what the customer wants! The trouble is that when a designer asks meaningful questions to get the information they need to do a good job...it starts sounding SO COMPLICATED.

Until you work side-by-side with people and hear them talk in depth about their work, it is very hard to understand all of this nuance and very easy to end up communicating poorly. Some construe this as mistrust — “they should respect me to do great work as a designer, and I shouldn’t have to prove it” — but I am not sure it is really like that. In many cases, it is just confusing.

Here's something you can try immediately to explore this dynamic.

Pose this question to your team:

What must you eventually know about this work to make good decisions at the right cadence?

Whenever I ask this question, I am blown away by the context people need to make great decisions. It helps break down walls. You start to understand their calculus. This post is actually related to my last post on drivers, constraints, and floats. As we gain experience, we are able to elicit more meaningful drivers.

The next thing you can try is to start with the Why of your questions. What decision will your question inform? This seems so simple, but people forget to do this all the time.

TBM 21/53: "Vision" and Prescriptive Roadmaps

I was chatting with a friend recently about product vision. They were fresh out of a meeting with their company's product management team. In that meeting, the product team had brought down the house. Applause! Questions! Excitement! According to my friend, the product team had redeemed themselves after a year of doubt. The presentation was visionary.

This sounded great (almost Steve Jobs-esque), so I dug in. What did they do? How did they get everyone excited?

It turns out the product managers went into full sell mode. Animated gifs of yet-to-be-built features. Stories. Promises. It was a full-on pitch fest. "Before this presentation, we never knew what they were doing," explained my friend who works in sales enablement. "I wasn't excited or motivated by their work. They sort of seemed like they were slacking." Now the product team was putting a stake in the ground!

Hearing my friend explain this brought a point home for me. For lots of people—especially folks who haven’t been involved in product work—having product vision means knowing exactly what you are going to build. Not knowing what to build is a sign of weakness. Knowing what to build and "telling the future" is a sign of confidence. Features Are Real. And something else…optics do matter when it comes to establishing and maintaining trust.

Picture yourself as a salesperson working to hit a quota quarter after quarter. Under the gun and in the trenches. Deals coming down the wire. In waltzes a product team talking about experiments, outcomes, missions, discovery, and abstract non-$ metrics. WTF? Does that feel fair? Where is the vision and commitment?

Here's the uncomfortable reality. In 90% of teams (especially in B2B), no one ever gets fired in the near/mid-term if they deliver a roadmap that everyone is bought into. This is one reason why feature factories are so prevalent. Once you've done the roadshow, everyone has a stake in—and a bias towards—those solutions. Even if the outcomes are mediocre. On some level, a mediocre outcome from shipping what you said you would ship (fast, of course) is better than a better outcome that no one understands.

In my work, I meet teams that are doing great outcome-focused work, but are terrible at "selling" those outcomes. They don't do whiz-bang prescriptive roadmaps, so they don't benefit from the perception of "seeing the future". They also don't take the time to really align the organization on what they actually choose to release, and don't circulate meaningful outcomes. When they hit a snag, it is back to square one and leadership overcorrects back to the feature factory. And a wicked cycle ensues.

You can't just decide one day to shift to an outcome-oriented roadmap and expect the rest of the org to fall in line. You have to contend with the fact that the people you work with likely view your job as seeing the future and knowing what to build. They actually admire product people for being able to do that.

So...if you plan to take another approach, you are going to need to fill that void. You are going to need to spin a new narrative across the team. You will need outcomes that matter to everyone, not just to the product team. You will need real results, and then you will need to shout those results from the rooftops.

I'll end with one of my favorite quotes from a salesperson:

I used to sell the roadmap. But the team started to do amazing work. On my calls, I could point to their amazing work over the last 12 months. The team consistently blew it out of the water. I sold our ability to innovate and I sold real ROI and outcomes, not future features.

That’s what you are looking for, and it isn’t easy.


TBM 22/53: Attacking X vs. Starting Y

During a Q&A session today, someone asked me about success theater (a term I use often). "How do you beat it? How do we stop the Success Theater?"

Success theater is one of those phrases that triggers an immediate, negative response. It sounds disingenuous, insulting, and frustrating. "It’s all optics and smoke and mirrors, and the experience sucks."

In the past, I would list all the things you should stop doing. I'd rile people up. Success theater sucks, right? We need to tell the unfiltered truth! But I've come to understand things differently in 2020.

The way to "beat" success theater is not to battle it head on. Instead, you need to plant and grow a better narrative. A genuine alternative. New connections and roots will form, and the better story (or stories) will steal the oxygen and energy from the old way.

Success theater provides a sense of momentum, celebration, motivation, recognition, and closure (albeit in a shallow way, and not for the whole team). If you provide a new narrative that meets those needs but in a deeper, more meaningful, and more effective way, people will embrace the new way.

Change often works like this. We go where we look. If we spend all of our time getting angry about X, we'll naturally crash into X and get stuck on X. So maybe the thought for this week: Are you getting dragged down by what you are trying to stop?

Do you see opportunities to focus on a better/healthier narrative?


TBM 23/53: Privilege & Credibility

I want to share a brief conversation I had this week with a coworker.

We were talking about the best way to pitch a new initiative. I prefer to start with an opportunity, or an agreement to explore an opportunity. She shared that as a woman of color in tech she was often "pushed" to pitch solutions to gain credibility (even if she preferred an opportunity-first approach).

I paused. I thought back to all the times I have advocated for teams to start together and delay converging on a solution. At no point did I even consider that some people have to be solution-forward. Here I was, a white man, opining about the benefits of starting together, but I wasn't seeing the whole picture. I remembered a couple of times I responded negatively to a woman going in guns blazing with her solution ideas. Why didn’t she start with the problem instead? Well…

And that's all I have this week. I have been thinking about it ever since. It was great feedback because I have always seen starting together as being inclusive. When you start together, you get to explore the problem and converge on a solution together. Everyone is invited. Or at least that is how I rationalize it.

But is everyone invited? And is the credibility to work that way evenly distributed?

Addressing that is weighing heavily on my mind this week.


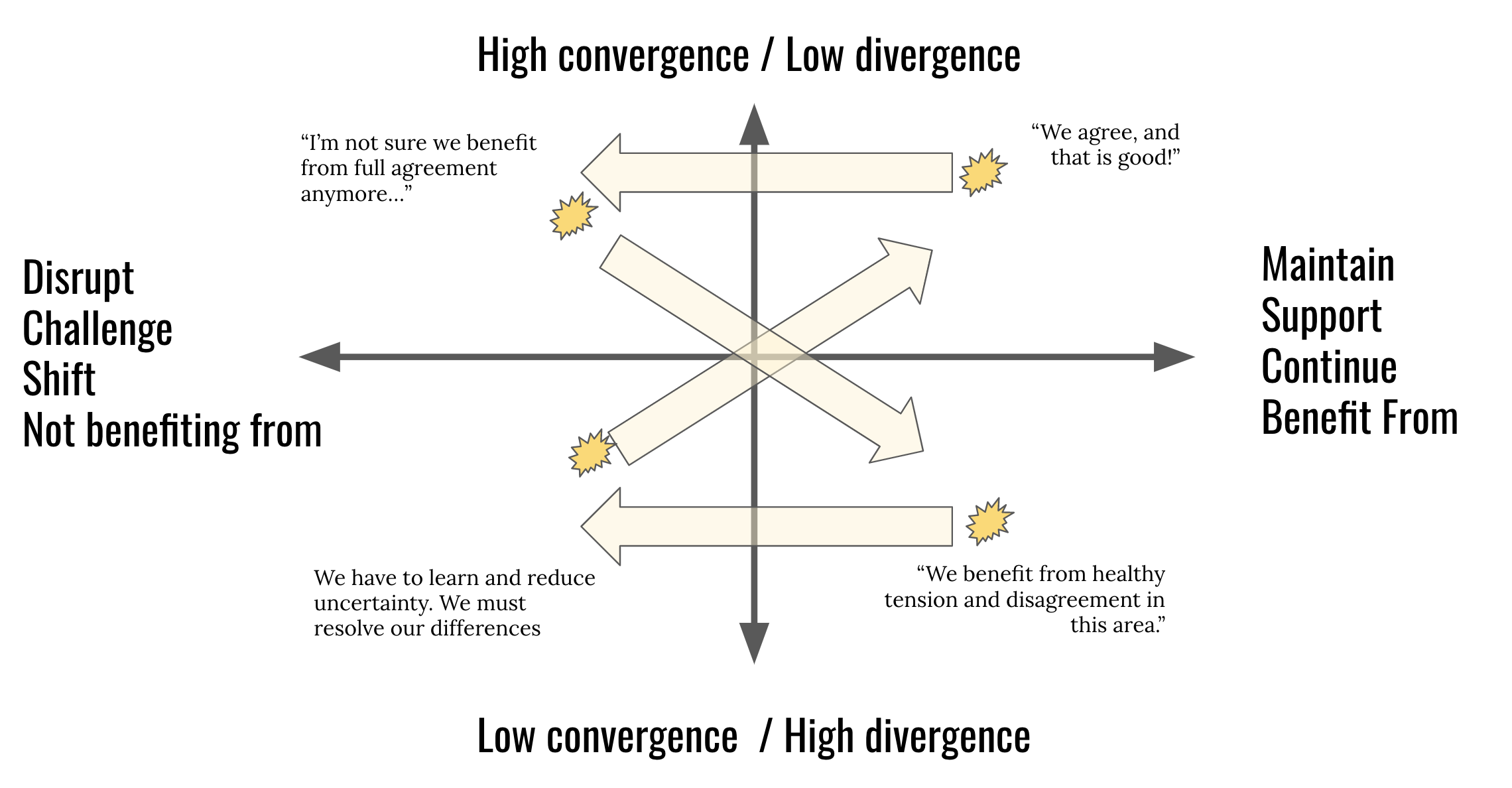

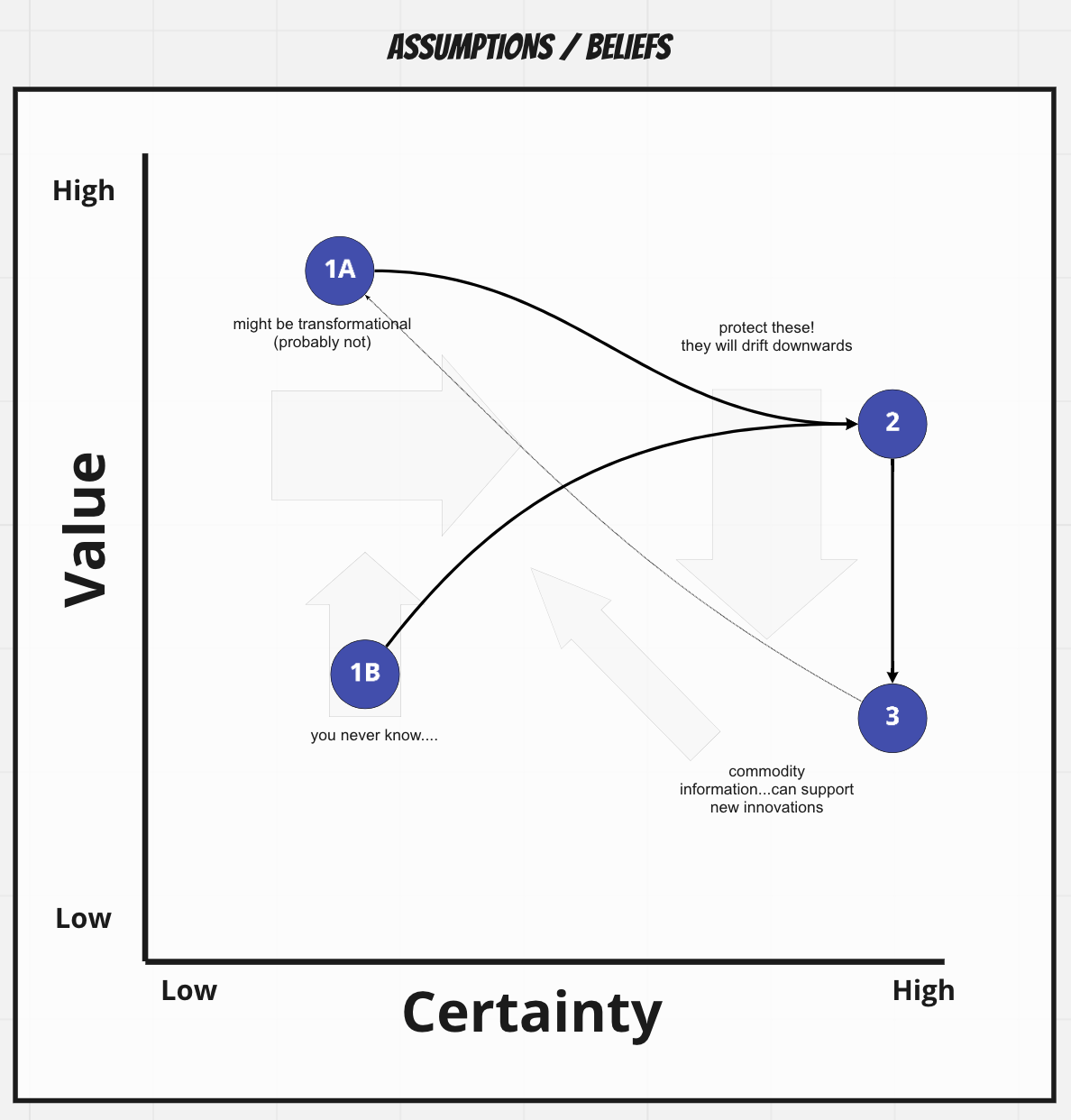

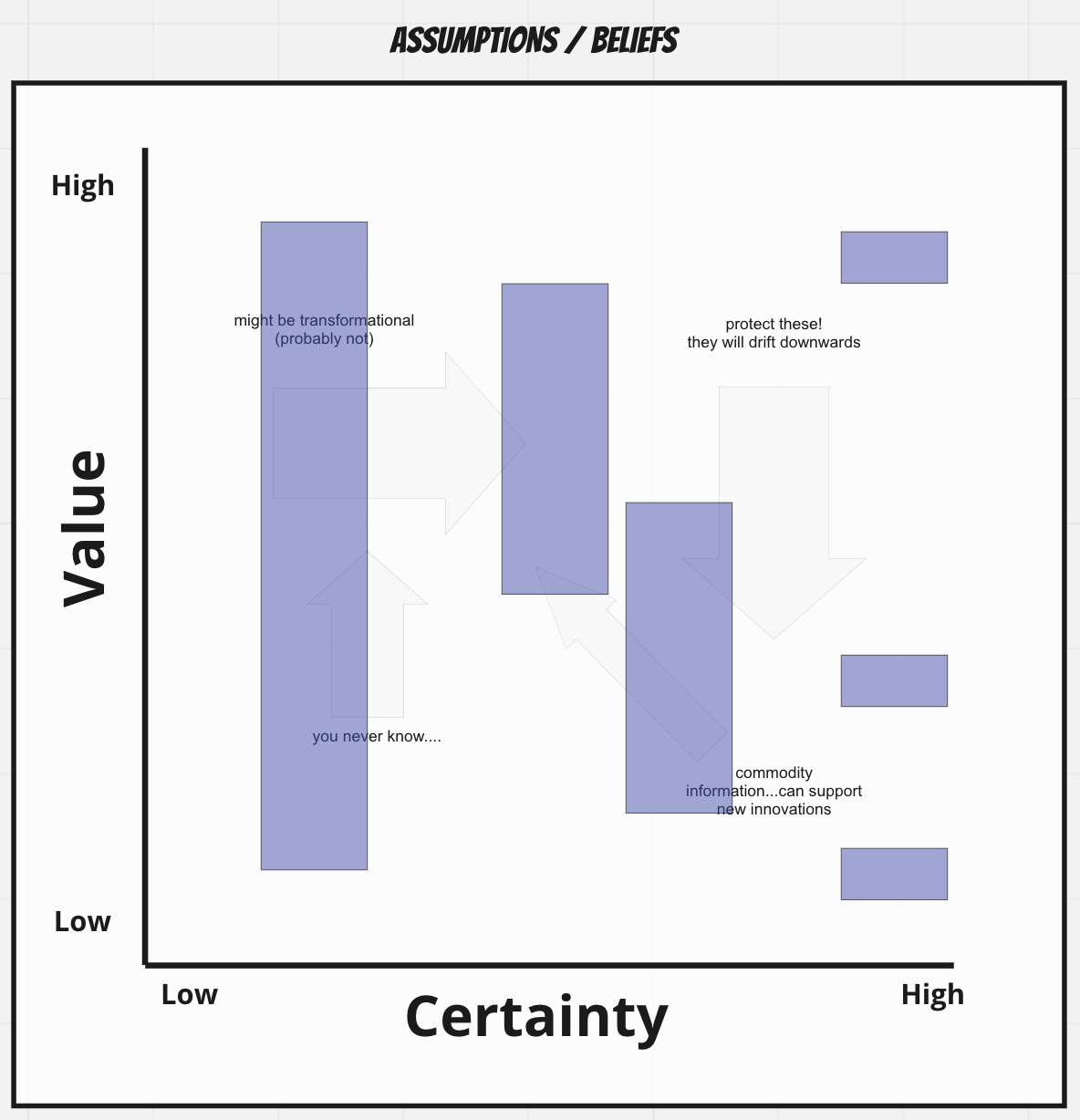

TBM 24/53: Mapping Beliefs (and Agreeing to Disagree)

I've been using this simple idea to inspire helpful conversations.

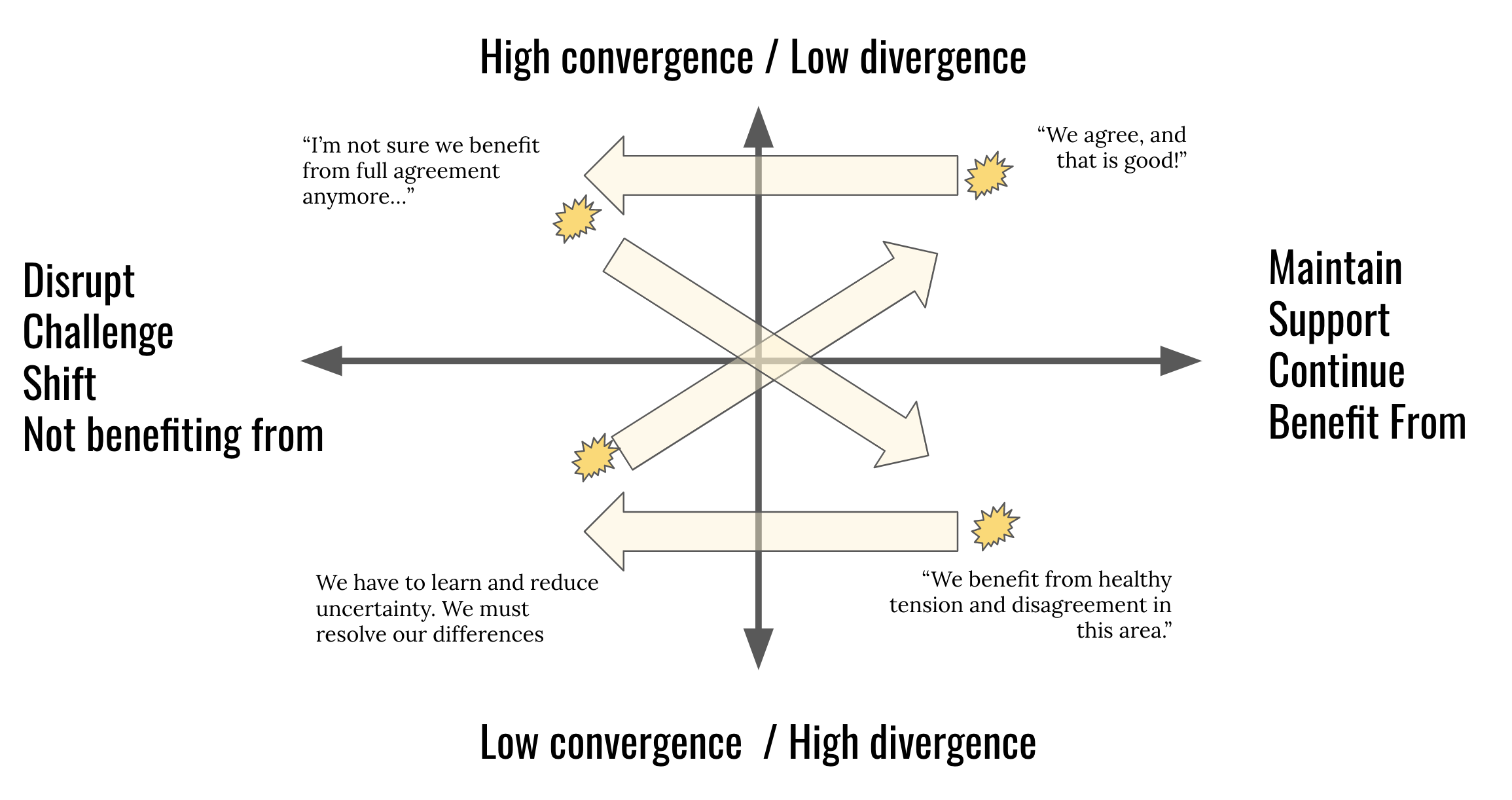

What is going on?

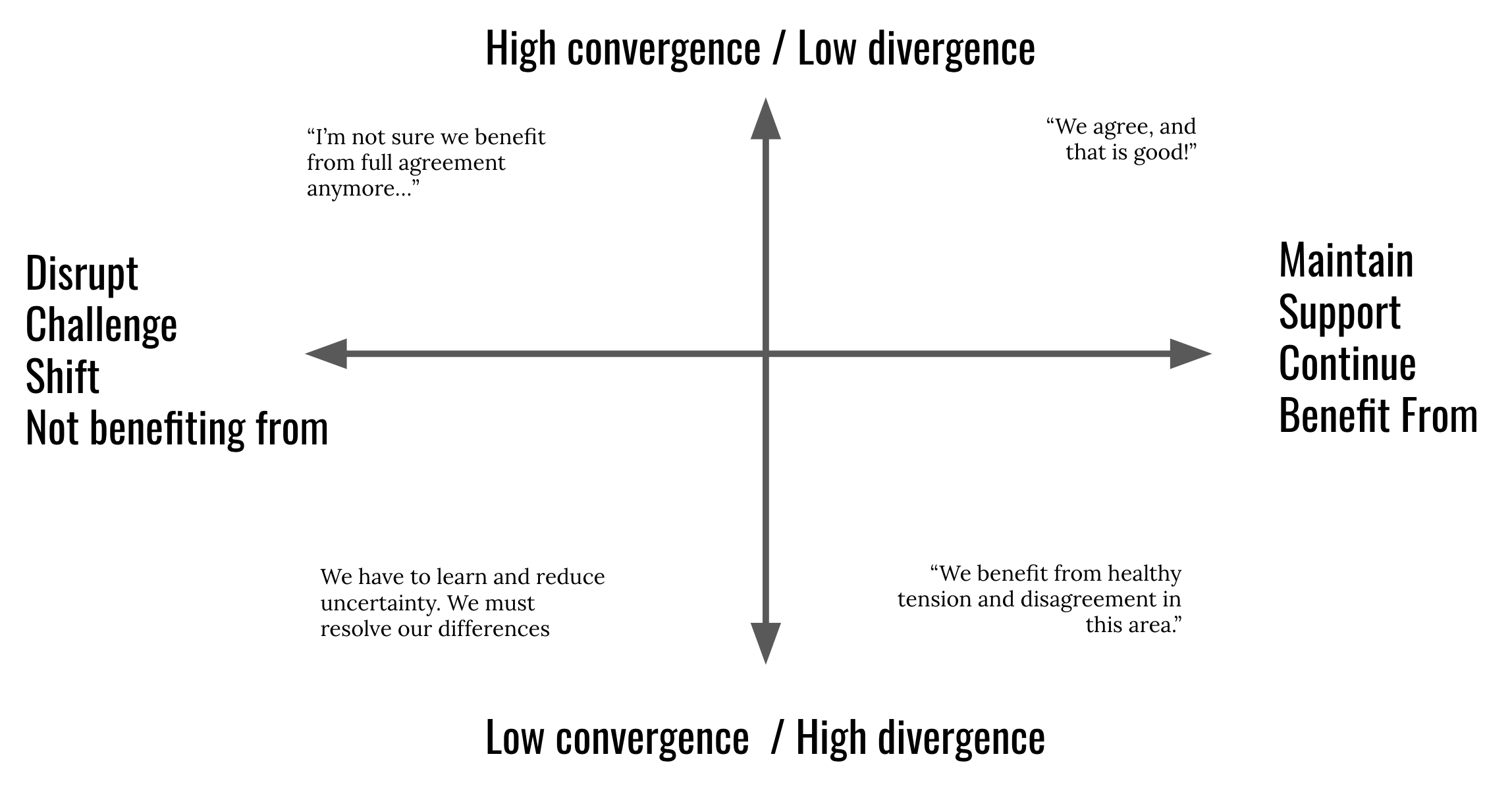

The y-axis describes the level of convergence around the belief. The x-axis describes whether that convergence is beneficial (right) or detrimental (left). Placing beliefs on the matrix is very subjective and that is the point.

Example beliefs (and placement). A team...

- Has diverse perspectives on how to evolve the product strategy. The tension is beneficial. Trying to converge now will silence important ideas. Lower Right

- Believes in the importance of diversity and inclusion, and must nurture and support that convergence. Upper Right

- Has a track record of careful estimation and planning (believes it is helpful). Leadership rewards "hitting your dates". But now the team -- or at least part of the team -- is wondering whether this might be hurting outcomes. Upper Left

- Has been struggling to settle a debate between two key executives for months. It is time to agree (shift to Upper Right) or agree to disagree (shift to Lower Right).

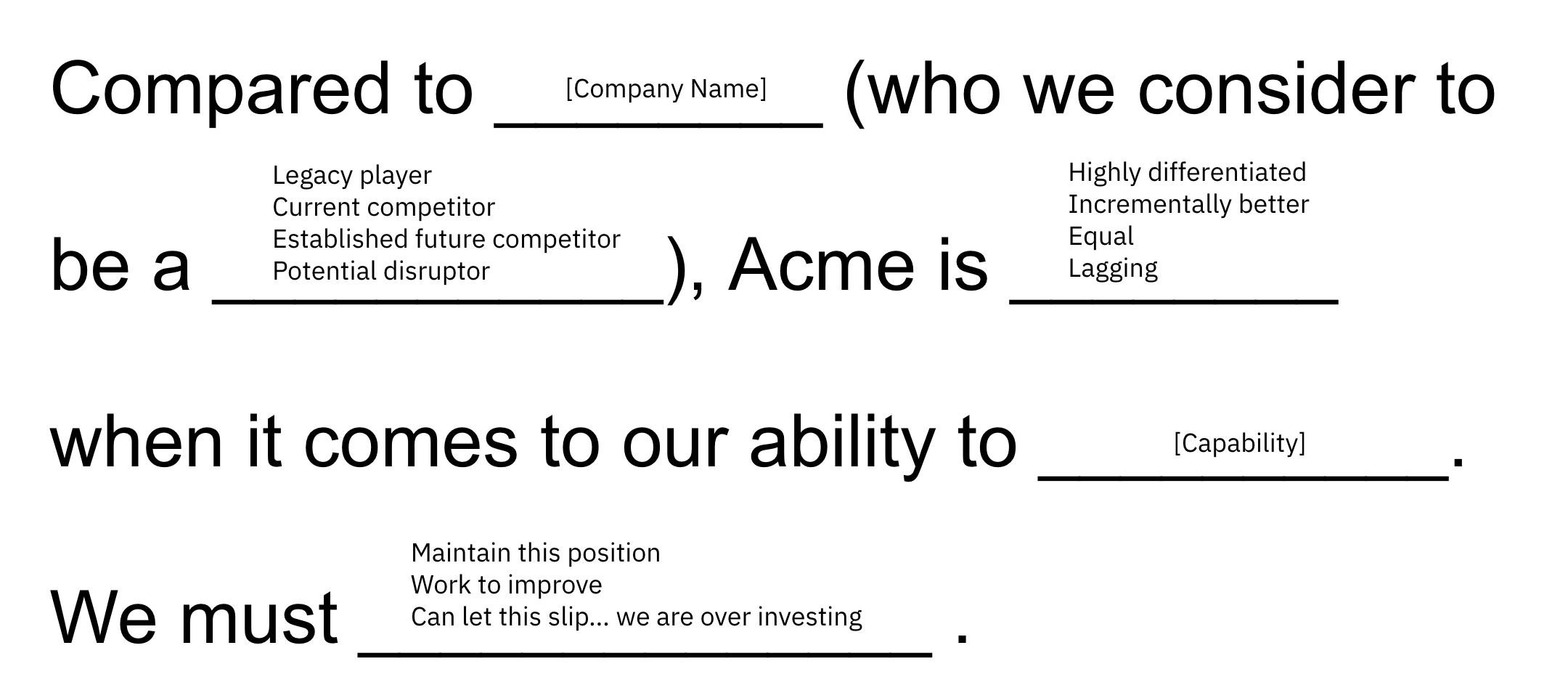

If you are having trouble eliciting beliefs, you might consider starting with this simple prompt:

Try silent brainstorming. You’ll be surprised by the variety of responses. And you’ll be surprised that many of the areas of divergence don’t actually matter at the moment…though they may matter in the future.

What I like about this model is that we tend to talk about agreement as if it is generally a good thing. Same with alignment. But often we need to agree to disagree. Or agree that our agreement is no longer valuable. Or disagree about the degree to which we are supporting areas of agreement.

There’s also an interesting dynamic at play:

Areas of agreement, over time, may become stale. Once you agree to challenge that convergence, the team is thrust into a period of uncertainty. But the uncertainty/divergence is valuable. At a certain point, you realize that convergence is necessary. Which ushers in the move to convergence and supporting an area of agreement. A company tends to have just a handful of ideas/beliefs that remain in the upper right.

Give this a try. To bootstrap the activity, make some notes about frequently communicated beliefs. Ask the team to place those beliefs on the matrix.


TBM 25/53: Persistent Models vs. Point-In-Time Goals

I've done a bunch of North Star Framework workshops over the last year. Lately I've noticed something important.

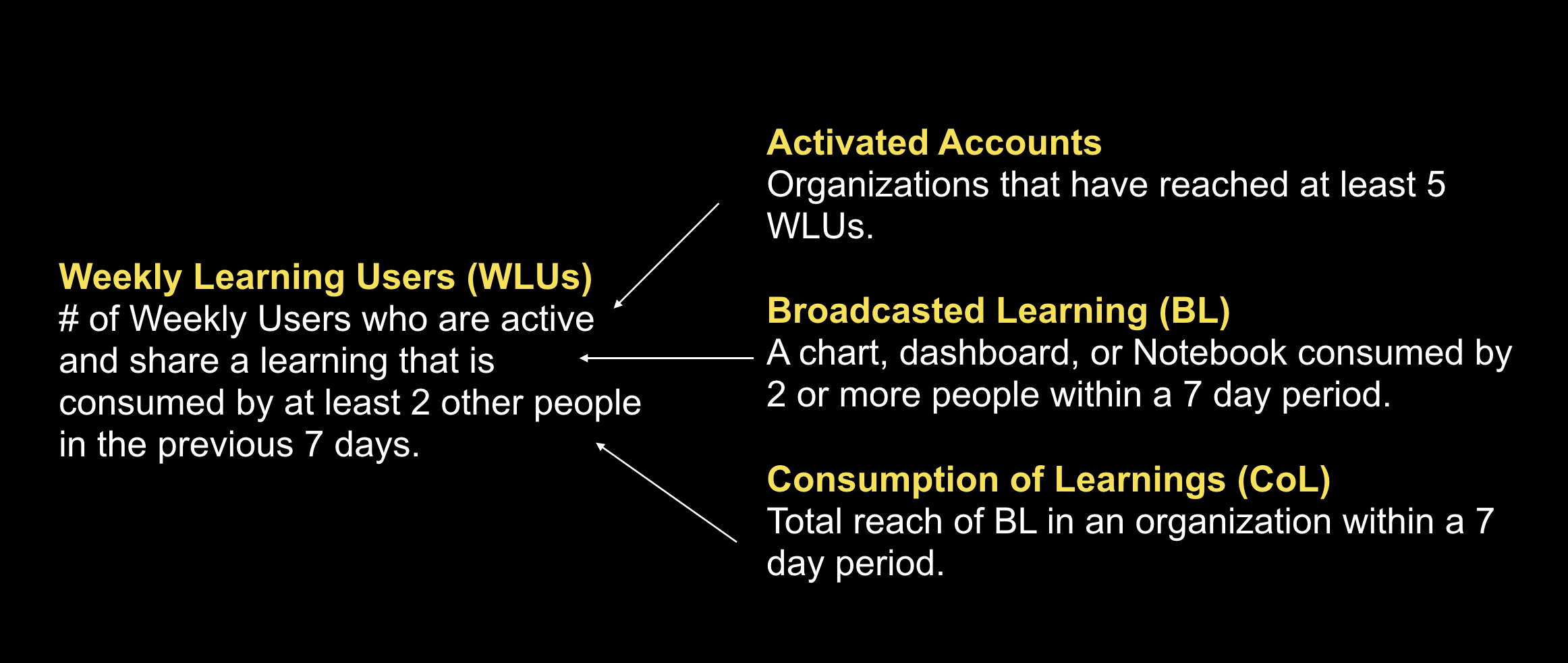

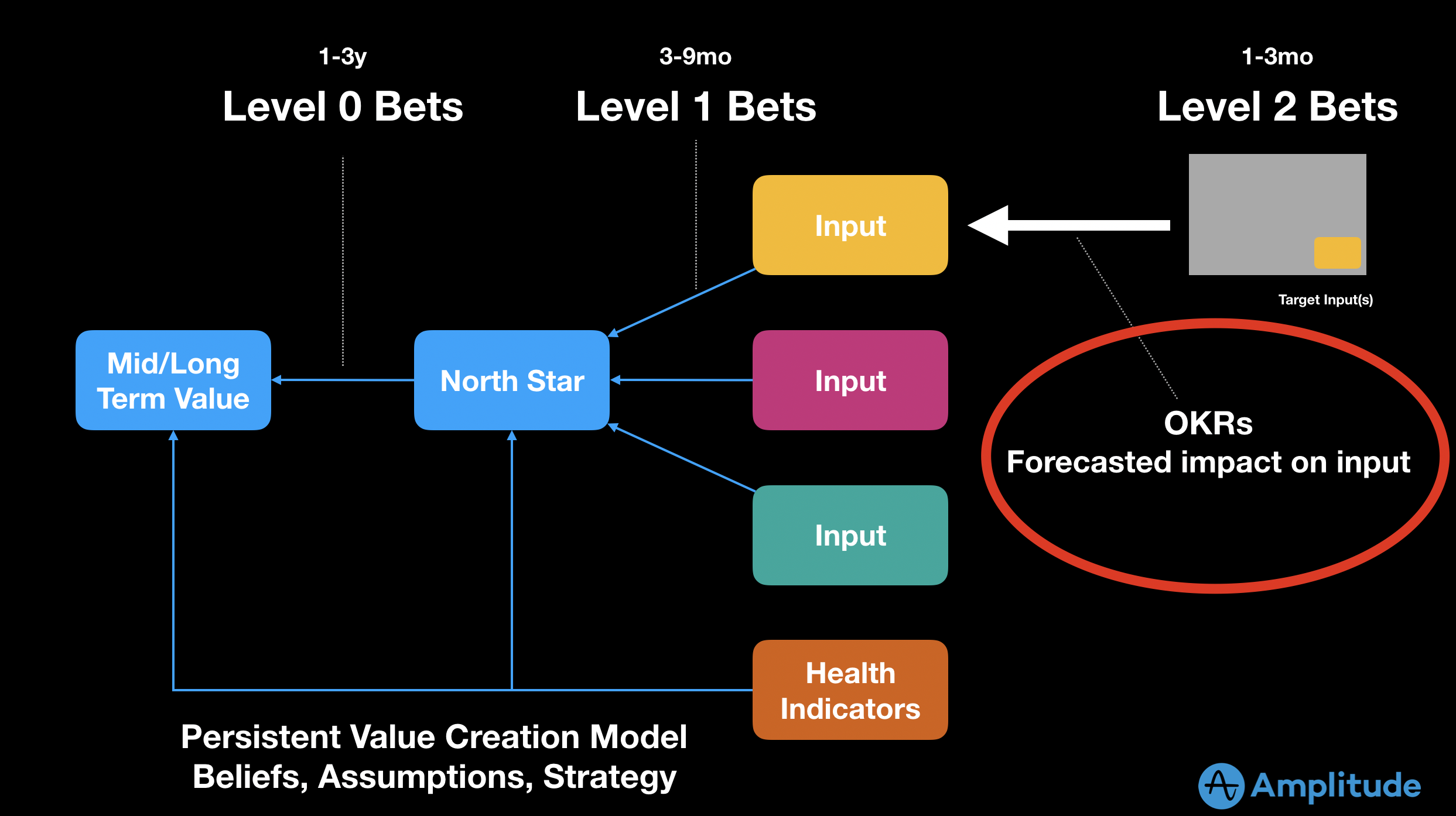

There is a big difference between persistent models and work-related (or goal-related) models. OKRs, for example, are a work-related model. Work-related models involve a specific time-span (e.g. a quarter). The team attempts to achieve The Goal by end-of-quarter.

Meanwhile, a north star metric and related inputs persist for as long as the strategy holds (often 1-3 years). The constellation of metrics serves as a belief map, driver diagram, or causal relationship diagram. It explains our mental model for how value is created and/or preserved in our product/system.

Here is Amplitude’s north star metric and inputs. Note how this is likely to remain steady for some period of time. There’s no “work” implied.

The two approaches are complementary. For example, a team attempting to influence broadcasted learnings might forecast how the current “work” will impact the rate at which people consume dashboards, notebooks, or charts by EOQ.

But here is the important part...

Without persistent models, teams are always chasing their tails. Quarterly OKRs should not feel like a "big deal". But they are exactly that when either 1) teams have to dream up their OKRs from scratch without a persistent model to guide them, or 2) OKRs are cascaded down and teams lack context.

So in my coaching, I have started to spend a lot more time with teams on exploring persistent models, and a lot less time on initiative goal setting. Why? Once you have the foundation set, initiative goals are a lot easier and more intuitive (and safer, and more effective).

In your work, how do you balance the use of both types of models?


TBM 26/53: Kryptonite and Curiosity

When you hear...

We just need to execute

or...

We just need the right people in the right roles

or...

Bring solutions not problems

or...

When everyone is responsible, no one is responsible

or...

That is a low maturity team

...do you find yourself nodding or cringing? Why?

These phrases are my kryptonite. What are yours?

I’ve started a crowdsourced Twitter thread to capture some.

I cringe for various reasons. Some reasons predate my professional career. Some reasons involve my own views of work, teamwork, and leadership. And some reasons involve work experiences that left me hurt, angry, and frustrated.

Yet I have respected peers who use those phrases without a moment's hesitation. In front of hundreds of people even! People whose work and leadership I admire. I still cringe, but lately I've tried to become more curious.

What you start to notice is how perspectives on work differ. People have differing views on accountability, efficacy, leadership, and how work actually happens. Obvious, right? Yet many people (sometimes me, I'll be honest) don't pause to think about how their words translate, or pause to give people the benefit of the doubt.

Some people aren't even aware that different styles exist, or that different styles can be viable. Example:

Why would you ever need to configure Jira to have multiple assignees? That just doesn't make sense. The thing would never get done!

This is especially true in places like Silicon Valley where people have experienced notable successes. Success often breeds myopia.

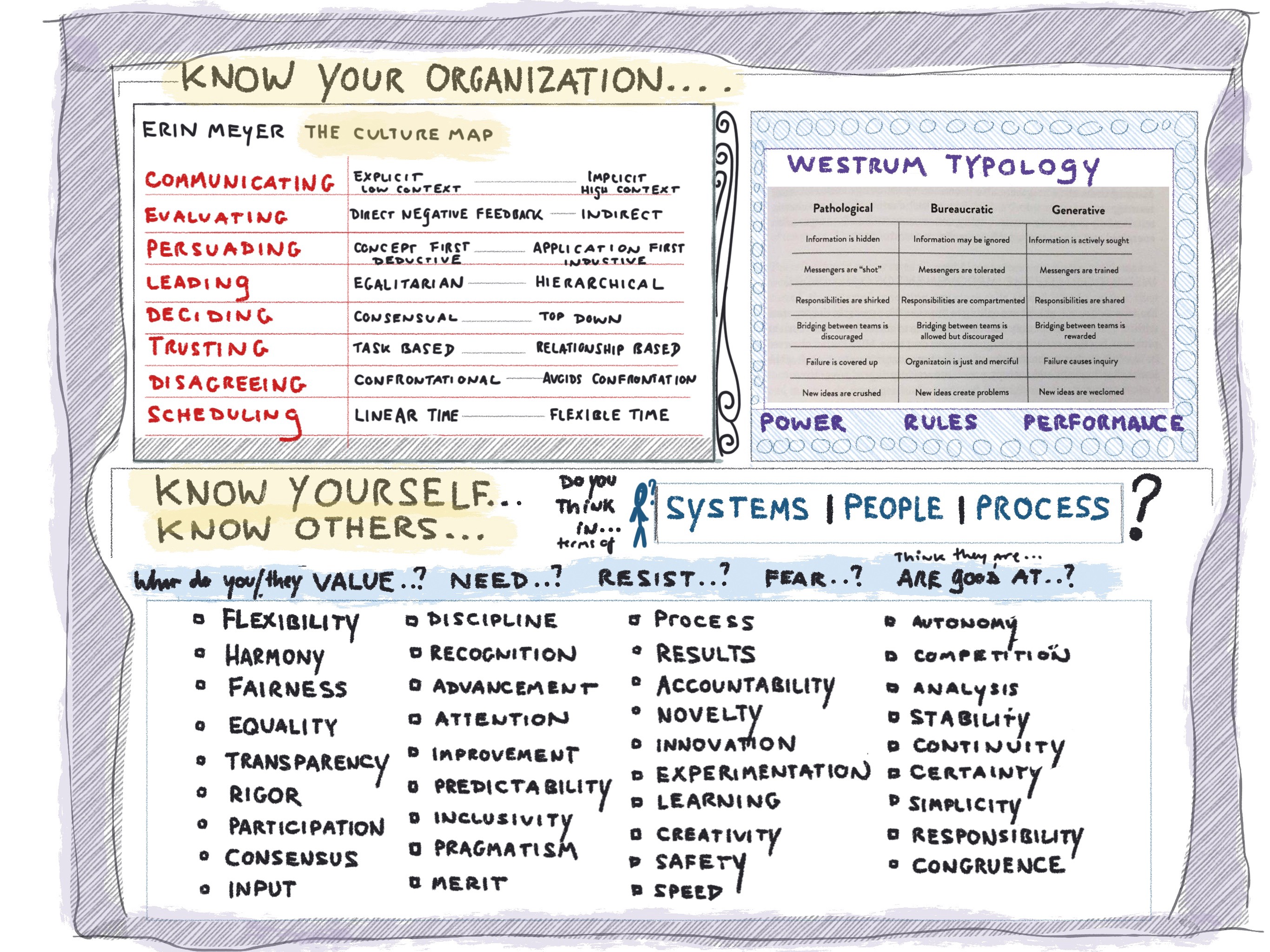

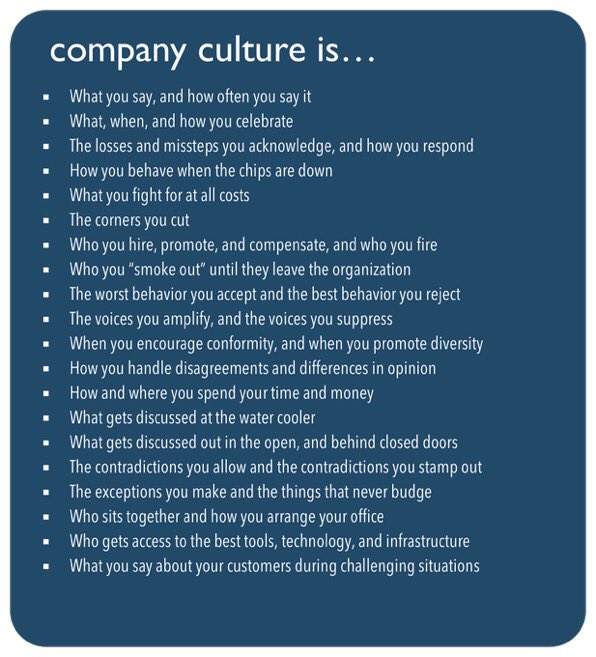

A couple of years ago I put together this list:

The idea was to encourage people to consider their work culture: to step back and observe before jumping into a change initiative. This is vital if you want to embrace the beautiful mess and be an effective teammate.

I think I'm making three points here:

- Consider how your own words come across. When in doubt, use more specific language with less baggage. Don't know which phrases have baggage? Ask your team. Invite feedback. This is especially important for leaders. One misstep in front of a large audience could take weeks to repair.

- Accept there are many different views of work. Explore these differences with your teammates. Get this out in the open.

- Come to grips with your own "kryptonite", and reflect on whether your knee-jerk response is helping or hurting. Try to stay curious.


TBM 27/53: The Lure of New Features and Products

Quick note of gratitude as we roll into the second half of 2020. This year has been so incredibly hard. I feel so lucky to have this outlet. Thank you.

Here's one of the key incentives for running a feature factory (especially in B2B):

Shipping shiny new features is easier than fixing underlying product issues.

What do I mean by easier?

New features (and products, even) get everyone excited. Customers will get that new thing they've been requesting for a while. Greenfield things are fun to work on, and to market, and to sell. And they are actually easier from a product development perspective. New stuff is less complex, less intertwined, and easier to wrap your head around.

When I ask teams to list their flops/misses with regard to new stuff, they struggle to come up with examples. We have a way of making sure the new stuff "wins" (at least from an optics perspective). The same is not true for optimizing existing parts of the product and addressing underlying issues. That's hard work. It's messy work. Misses abound.

We equate innovation with the new, not with improving the existing. This holds even though improving the existing is, in many cases, harder and more challenging.

So we have incentives to keep adding and adding, while avoiding fixing and optimizing. It is way easier to ship 5 "MVP" features than to make sure the first one works.

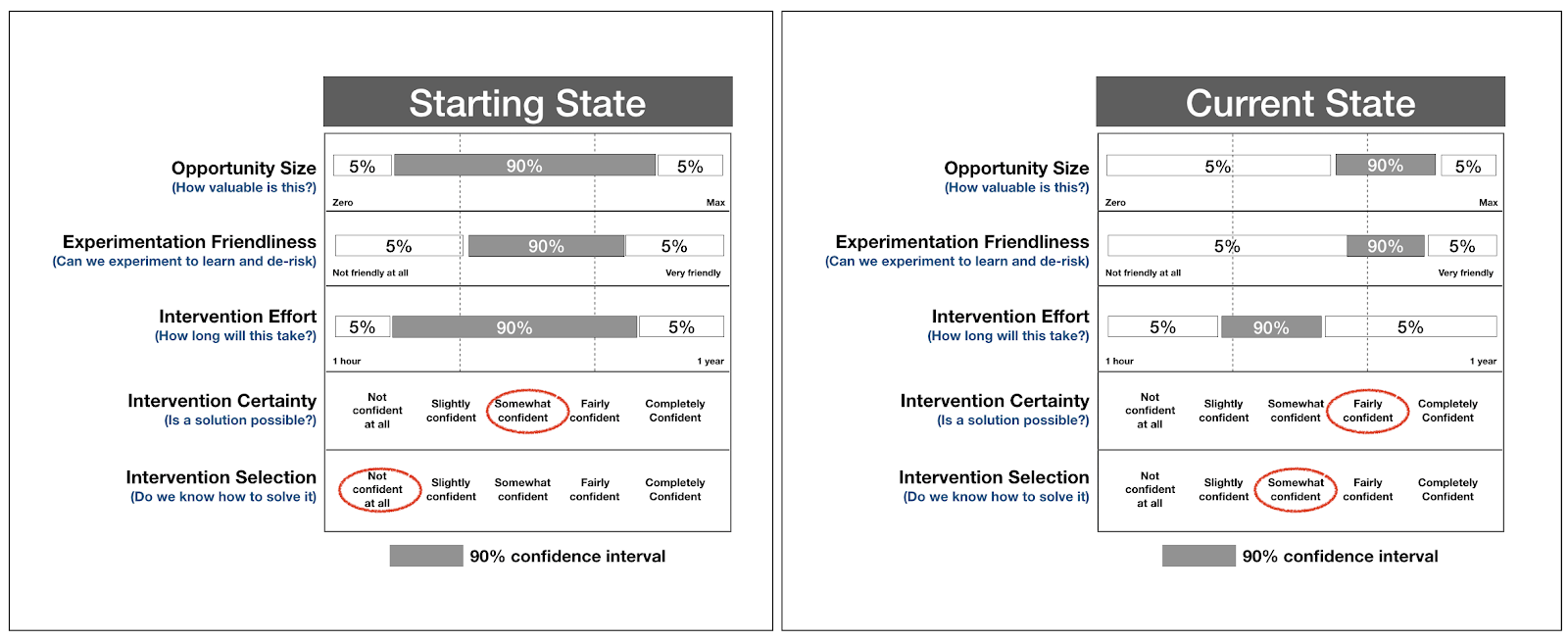

So what? If it makes money, this is a fine strategy, right?